Information retrieval

Information retrieval is defined as "a branch of computer or library science relating to the storage, locating, searching, and selecting, upon demand, relevant data on a given subject."[1] As noted by Carl Sagan, "human beings have, in the most recent few tenths of a percent of our existence, invented not only extra-genetic but also extrasomatic knowledge: information stored outside our bodies, of which writing is the most notable example."[2] The benefits of enhancing personal knowledge with retrieval of extrasomatic knowledge or transactive memory have been shown in comparisons with rote memory.[3][4]

Although information retrieval is usually thought of as being done by computer, retrieval can also be done by humans for other humans.[5] In addition, some Internet search engines, such as mahalo.com and http://www.chacha.com/, may use human supervisors or editors.

Some Internet search engines, such as http://www.deeppeep.org and http://www.deepdyve.com/, attempt to index the Deep Web, which consists of web pages that are not normally indexed by conventional search engines.[6]

The usefulness of a search engine has been proposed to be:[7]

$$\text{Usefulness} = \frac{\text{Relevance} \times \text{Validity}}{\text{Work}}$$

Classification by user purpose

Information retrieval can be divided into information discovery, information recovery, and information awareness.[8]

Information discovery

Information discovery is searching for information that the searcher has not seen before and is not certain exists. It includes searching to answer a question at hand, as well as searching a topic without a specific question in order to learn more about it.

Information recovery

Information recovery is searching for information that the searcher has seen before and knows to exist.

Information awareness

Information awareness has also been described as "'systematic serendipity' - an organized process of information discovery of that which he [the searcher] did not know existed".[8] Information awareness can be further divided into:[9]

- Information familiarity

- Knowledge acquisition (also called recollection), which is the ability to apply the new knowledge.

Examples of information awareness before the Internet include reading print and online periodicals. With the Internet, new methods include email newsletters,[10] email alerts, and RSS feeds.[11]

These methods may increase information familiarity.[9]

Classification by indexing methods used

Document retrieval

Models for information retrieval of documents are based either on the text of the document or on the links between the document and other documents.[12]

Models based on analysis of the text include the Boolean, vector space, and probabilistic models.[12]

Models based on analysis of the links include PageRank, HITS, and impact factor.[12]

Boolean (set theoretic, exact matching)

Variants of the Boolean model include:[12]

- Fuzzy logic (used with thesauri)

- Extended Boolean
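The Boolean model can be illustrated with a minimal sketch. It assumes a toy in-memory collection and whitespace tokenization, and the function names `build_index`, `boolean_and`, `boolean_or` and `boolean_not` are hypothetical. An inverted index maps each word to the set of documents containing it. With that index, AND, OR and NOT become set intersection, union and difference. A document either matches or does not, and no ranking is produced.

```python
from collections import defaultdict


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Inverted index: each lower-cased word -> IDs of the documents containing it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def boolean_and(index: dict[str, set[str]], *terms: str) -> set[str]:
    """Documents containing every term (set intersection)."""
    postings = [index.get(t.lower(), set()) for t in terms]
    return set.intersection(*postings) if postings else set()


def boolean_or(index: dict[str, set[str]], *terms: str) -> set[str]:
    """Documents containing at least one term (set union)."""
    return set().union(*(index.get(t.lower(), set()) for t in terms))


def boolean_not(index: dict[str, set[str]], all_ids, term: str) -> set[str]:
    """Documents that do not contain the term (set difference)."""
    return set(all_ids) - index.get(term.lower(), set())


# Hypothetical toy collection
docs = {
    "d1": "aspirin reduces fever",
    "d2": "aspirin and stroke prevention",
    "d3": "fever in children",
}
index = build_index(docs)
print(boolean_and(index, "aspirin", "fever"))   # {'d1'}
print(boolean_or(index, "stroke", "children"))  # the two documents d2 and d3
print(boolean_not(index, docs, "aspirin"))      # {'d3'}
```

Matching is exact, so a query for "fever" will not retrieve a document that only says "pyrexia". The fuzzy and extended Boolean variants listed above exist to soften this all-or-nothing matching.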

Vector space model (relevancy, algebraic, partial match, ranking)

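Relevance is determined by weighting each concept (term) $i$ in document $j$ with tf-idf weighting. This multiplies the term's frequency in the document by its inverse document frequency in the collection:[13]

$$w_{i,j} = \mathrm{tf}_{i,j} \times \log\frac{N}{n_i}$$

Here $\mathrm{tf}_{i,j}$ is the number of times term $i$ occurs in document $j$, $N$ is the number of documents in the collection, and $n_i$ is the number of documents that contain term $i$.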
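The following is a minimal sketch of vector space ranking. It assumes whitespace tokenization and the common tf-idf variant, in which a term's weight is its frequency in the document multiplied by log(N/n). Here N is the number of documents in the collection and n is the number of documents containing the term. Documents are ranked by the cosine of the angle between their weight vector and the query's weight vector. The function names are hypothetical, and real systems often use other tf and idf variants and normalizations.

```python
import math
from collections import Counter


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine of the angle between two sparse term-weight vectors."""
    dot = sum(w * b.get(term, 0.0) for term, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_tfidf(query: str, docs: list[str]) -> list[tuple[int, float]]:
    """Rank documents by cosine similarity of tf-idf vectors; returns (doc index, score)."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    # n_i: number of documents containing term i
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log(n_docs / n) for term, n in doc_freq.items()}
    # w_ij = tf_ij * log(N / n_i)
    doc_vectors = [
        {term: tf * idf[term] for term, tf in Counter(tokens).items()}
        for tokens in tokenized
    ]
    # Query terms absent from the collection carry no weight and are dropped
    query_vector = {
        term: tf * idf[term]
        for term, tf in Counter(query.lower().split()).items()
        if term in idf
    }
    scores = [(i, cosine(query_vector, vec)) for i, vec in enumerate(doc_vectors)]
    return sorted(scores, key=lambda s: s[1], reverse=True)


# Hypothetical toy collection
docs = ["aspirin reduces fever", "aspirin and stroke prevention", "fever in children with fever"]
for doc_index, score in rank_tfidf("fever children", docs):
    print(doc_index, round(score, 3))
```

Unlike the Boolean model, this gives a partial match: the first document mentions only "fever" and still receives a nonzero score. Under this variant, a term that appears in every document gets an idf of log(1) = 0 and so contributes nothing to the ranking.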

Variants of the vector space model include:[12]

- Generalized vector space model (allows for correlated search terms)

- Latent semantic indexing model (allows searching for synonymous concepts rather than literal search terms)

- Neural network model

Probabilistic (Bayes)

Variants of the probabilistic model include:[12]

- Inference network

- Belief network
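The article gives no formula for the probabilistic model. One widely used ranking function derived from probabilistic retrieval is Okapi BM25, sketched below as an illustration. It is not presented as the specific model described by the sources above. The parameters k1 = 1.2 and b = 0.75 are conventional defaults, not values from the source. The idf uses the log(1 + ...) form, which keeps weights non-negative for very common terms.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each document for the query (higher = more likely relevant)."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        # Longer-than-average documents have their term frequencies damped more
        length_norm = k1 * (1 - b + b * len(tokens) / avg_len)
        score = 0.0
        for term in query.lower().split():
            if tf[term] == 0:
                continue
            n = doc_freq[term]
            idf = math.log(1 + (n_docs - n + 0.5) / (n + 0.5))
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores


# Hypothetical toy collection
docs = ["aspirin reduces fever", "aspirin and stroke prevention", "fever in children with fever"]
print(bm25_scores("fever children", docs))
```

The term-frequency factor saturates as tf grows, so repeating a word many times raises a document's score less and less.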

Analysis of links
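Link analysis can be illustrated with PageRank, one of the link-based models named above. The sketch computes it by power iteration over a hypothetical four-page link graph. A damping factor of 0.85 is the value commonly quoted for PageRank and is used here as an assumption. Pages with no outgoing links are treated as linking to every page, so the scores always sum to 1.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             max_iter: int = 100, tol: float = 1e-10) -> dict[str, float]:
    """PageRank by power iteration; links maps each page to the pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Rank held by pages without outgoing links is spread evenly over all pages
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        # Each page passes a damped share of its rank to every page it links to
        for page, targets in links.items():
            for target in targets:
                new_rank[target] += damping * rank[page] / len(targets)
        delta = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if delta < tol:
            break
    return rank


# Hypothetical link graph: page -> pages it links to
links = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
scores = pagerank(links)
print(max(scores, key=scores.get))  # c
```

Page "c" ranks highest because three pages link to it. Page "d" has no inbound links and keeps only the baseline share (1 - 0.85) / 4.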

Factors associated with unsuccessful retrieval

The field of medicine provides much research on the difficulties of information retrieval. Barriers to successful retrieval include:

- Lack of prior experience with the information retrieval system being used[14][3]

- Low visual spatial ability[14]

- Poor formulation of the question to be searched[15]

- Difficulty designing a search strategy when multiple resources are available[15]

- "Uncertainty about how to know when all the relevant evidence has been found so that the search can stop"[15]

- Difficulty synthesizing an answer across multiple documents[15]

Factors associated with successful retrieval

Characteristics of how the information is stored

For storage of text content, the quality of the index to the content is important. For example, stemming (truncating words by removing suffixes) may help.[16]
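The idea behind stemming can be shown with a deliberately crude suffix stripper. This is not the Porter algorithm cited above, which applies several ordered rule steps with conditions on the remaining stem. The suffix list and minimum stem length below are hypothetical choices for illustration only.

```python
# Hypothetical suffix list, ordered longest first so "es" is tried before "s"
SUFFIXES = ("ing", "ed", "es", "s")


def crude_stem(word: str, min_stem: int = 3) -> str:
    """Strip the first matching suffix, keeping at least min_stem characters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


print([crude_stem(w) for w in ["search", "searches", "searched", "searching"]])
# ['search', 'search', 'search', 'search']
```

If the same function is applied to both index terms and query terms, a query for "searching" matches documents containing "searches". Suffix stripping can also over-stem: `crude_stem("news")` returns "new".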

Display of information

Information that is structured may be more effective, according to controlled studies.[17][18] In addition, the structure should be layered, with a summary of the content as the first layer that the reader sees.[19] This allows the reader to take only an overview or to choose more detail. Some Internet search engines, such as http://www.kosmix.com/, try to organize search results beyond a one-dimensional list.

Regarding display of results from search engines, an interface designed to reduce anchoring and order bias may improve decision making.[20]

Characteristics of the search engine

John Battelle has described features of the perfect search engine of the future.[21] Boolean searching may be inefficient.[22] Meta-searching and task-based searching may improve decision velocity.[23]

Meta-search

Meta-search engines search multiple resources and integrate the results for the user. Examples in health care include Trip Database, MacPLUS, and QuickClinical.
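The article does not say how meta-search engines such as these combine their results. One simple published technique for merging ranked lists is reciprocal rank fusion. Each document scores 1/(k + rank) in every list where it appears, and the scores are summed. The sketch assumes k = 60, a commonly used value. The example inputs are hypothetical, and the technique is not claimed to be what any of the named services use.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists; a document scores sum(1 / (k + rank)) over the lists containing it."""
    scores: dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical ranked results from three sources
merged = reciprocal_rank_fusion([["a", "b", "c"], ["b", "d"], ["b", "a"]])
print(merged)  # ['b', 'a', 'd', 'c']
```

The method needs only ranks, not the sources' internal scores, which usually cannot be compared across different search engines.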

Characteristics of the searcher

In healthcare, searchers are more likely to be successful if they already know the correct answer before searching, have experience with the system they are searching, and have a high spatial visualization score.[14] Also in healthcare, physicians with less experience are more likely to want more information.[24] Physicians who report stress when uncertain are more likely to search textbooks than source evidence.[25]

In healthcare, using expert searchers on behalf of physicians led to increased satisfaction by the physicians with the search results.[26]

Impact of information retrieval

The benefits of enhancing personal knowledge with retrieval of extrasomatic knowledge have been shown in a controlled comparison with rote memory.[3]

Various before and after comparisons are summarized in the tables.

| Search engine | Users | Questions | Answers correct before searching | Answers correct after searching | Answers that moved from correct to incorrect |
|---|---|---|---|---|---|
| Quick Clinical[28][23] (federated search) | 73 practicing doctors and clinical nurse consultants | Eight clinical questions; 600 total responses | 37% | 50% | 7% |
| User's own choice[27] | 23 primary care physicians | 2 questions from a pool of 23 clinical questions from Hersh[14]; 46 total responses | 39% | 42% | 11% |
| OVID[14] | 45 senior medical students (data available for nursing students) | 5 questions from a pool of 23 clinical questions from Hersh[14]; 324 total responses | 32% | 52% | 13% |

| Study | Searches | Frequency useful information found | Frequency changed care |
|---|---|---|---|
| Crowley[29] | 625 self-initiated searches | 83% | 39% |
| Rochester study[30] | | | 80% |
| Chicago study[31] | | | 74% |

Critical incident studies can also document impact of information retrieval.[32][33]

Evaluation of the quality of information retrieval

Various methods exist to evaluate the quality of information retrieval.[34][35][36] Hersh[35] noted the classification of evaluation developed by Lancaster and Warner,[34] in which the first level of evaluation is:

- Costs/resources consumed in learning and using a system

- Time needed to use the system[37]

- Quality of the results
  - Coverage. An estimate of coverage can be crudely automated.[38] However, more accurate judgment of relevance requires a human judge, which introduces subjectivity.[39]
  - Precision and recall
  - Novelty. This has been judged by independent reviewers.[40]
  - Completeness and accuracy of results. An easy method of assessing this is to let the searcher make a subjective assessment.[29][41][42][43] Other methods include using a bank of questions with known target documents[44] or known answers.[14][27]

- Usage
  - Self-reported
  - Measured[37]

Precision and recall

Recall is the fraction of relevant documents that are successfully retrieved. This is the same as sensitivity. The recall has also been called the "yield."[45]

Precision is the fraction of retrieved documents that are relevant to the search. This is the same as positive predictive value.

F1 is the unweighted harmonic mean of the recall and precision.[36][46]
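Precision, recall and F1 can be computed from the set of retrieved documents and the set of relevant documents. In the terms used above, recall is sensitivity and precision is positive predictive value. The example search is hypothetical: 10 documents are retrieved, 4 of them are relevant, and the collection contains 8 relevant documents in total.

```python
def precision_recall_f1(retrieved, relevant) -> tuple[float, float, float]:
    """Precision, recall and F1 (unweighted harmonic mean) for one search."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical search: 10 documents retrieved, 4 of them relevant, 8 relevant in total
retrieved = [f"d{i}" for i in range(1, 11)]
relevant = [f"d{i}" for i in (1, 2, 3, 4, 11, 12, 13, 14)]
p, r, f1 = precision_recall_f1(retrieved, relevant)
print(p, r, round(f1, 3))  # 0.4 0.5 0.444
```

Because F1 is a harmonic mean, it stays close to the smaller of the two values. A search with perfect recall but very low precision therefore still scores poorly.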

Number needed to read

The number needed to read (NNR) is "how many papers in a journal have to be read to find one of adequate clinical quality and relevance."[47][48][49][50] Of note, the NNR has been proposed as a metric to help libraries decide which journals to subscribe to.[47] The NNR has also been called the "burden."[45]
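Reading NNR papers yields, on average, one adequate paper. The NNR is therefore the reciprocal of the proportion of papers read that are adequate, which equals 1/precision when "adequate" is the relevance criterion. A minimal sketch with hypothetical counts:

```python
def number_needed_to_read(papers_read: int, adequate_papers: int) -> float:
    """Papers that must be read, on average, to find one of adequate quality and relevance."""
    if adequate_papers == 0:
        raise ValueError("NNR is undefined when no adequate papers were found")
    return papers_read / adequate_papers  # equivalently 1 / precision


# Hypothetical journal: 5 of 50 papers read were clinically adequate and relevant
print(number_needed_to_read(50, 5))  # 10.0
```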

Number needed to search

The number needed to search (NNS) is the number of questions that would have to be searched for one question to be well answered.[40]
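The NNS counts questions searched per well-answered question, so it is the reciprocal of the proportion $p$ of searched questions that are well answered. Using hypothetical figures of 20 questions searched and 8 well answered:

$$\mathrm{NNS} = \frac{1}{p} = \frac{\text{questions searched}}{\text{questions well answered}} = \frac{20}{8} = 2.5$$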

Hit curve

A hit curve plots the number of relevant documents retrieved among the first n results against n.[51][52]
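A hit curve can be computed from a ranked result list and a set of relevance judgments. The ranking and judgments below are hypothetical. Each element of the output is the number of relevant documents found so far.

```python
from itertools import accumulate


def hit_curve(ranked_ids: list[str], relevant_ids: set[str]) -> list[int]:
    """Element n-1 is the number of relevant documents among the first n results."""
    return list(accumulate(1 if doc_id in relevant_ids else 0 for doc_id in ranked_ids))


# Hypothetical ranking and relevance judgments
print(hit_curve(["d3", "d7", "d1", "d9", "d2"], {"d1", "d2", "d3"}))  # [1, 1, 2, 2, 3]
```

To compare two search algorithms, plot both curves over the same queries. The curve that rises faster puts relevant documents nearer the top of the list.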

Decision velocity

The time needed to answer a question can be compared between two systems using Kaplan-Meier survival analysis.[23]

In health care, difficult questions may take hours to answer.[53]
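Below is a minimal sketch of the Kaplan-Meier (product-limit) estimator applied to time-to-answer data. It assumes each question has a time in minutes and a flag saying whether it was answered within the session. Unanswered questions are treated as censored. The data are hypothetical. A formal comparison of two systems' curves would normally also use a test such as the log-rank test, which is not shown.

```python
def kaplan_meier(times: list[float], answered: list[bool]) -> list[tuple[float, float]]:
    """Product-limit estimate of S(t), the fraction of questions still unanswered at time t.

    Questions with answered=False are censored: known to be unanswered up to that time.
    Returns (t, S(t)) at each time when at least one question was answered.
    """
    events = sorted(zip(times, answered))
    at_risk = len(events)
    survival = 1.0
    curve = []
    i = 0
    while i < len(events):
        t = events[i][0]
        n_answered = n_leaving = 0
        # Group all questions that ended at the same time
        while i < len(events) and events[i][0] == t:
            n_answered += int(events[i][1])
            n_leaving += 1
            i += 1
        if n_answered:
            survival *= 1 - n_answered / at_risk
            curve.append((t, survival))
        at_risk -= n_leaving
    return curve


# Hypothetical minutes to answer six questions; False = not answered (censored)
times = [2, 3, 3, 5, 8, 10]
answered = [True, True, False, True, True, False]
for t, s in kaplan_meier(times, answered):
    print(f"t={t} min: {1 - s:.2f} answered")
```

Plotting 1 - S(t) for each system gives the cumulative proportion of questions answered over time. A system whose curve rises faster has the higher decision velocity.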

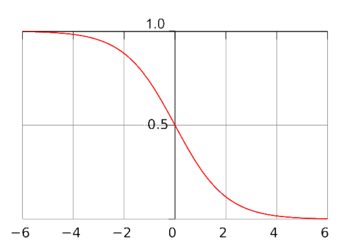

If the correct answer to each search question is known, a logistic function can model the cumulative proportion of questions answered correctly over time. The result is an S-curve (also called a sigmoid curve or logistic growth curve). Most questions are answered after an initial delay, while a minority of questions take much longer.
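The S-curve can be written as a logistic function of time. In this minimal sketch, `k` sets the steepness and `t_mid` is the time at which half of the ceiling is reached. The parameter values are hypothetical. The optional `ceiling` parameter is an assumption rather than part of the source: it allows for questions that are never answered correctly.

```python
import math


def proportion_answered(t: float, k: float, t_mid: float, ceiling: float = 1.0) -> float:
    """Logistic model of the cumulative proportion of questions answered correctly by time t.

    k: steepness; t_mid: time at which half of `ceiling` is reached;
    ceiling: long-run proportion answered correctly (hypothetical, may be below 1).
    """
    return ceiling / (1 + math.exp(-k * (t - t_mid)))


# Hypothetical parameters: k = 0.5 per minute, midpoint at 10 minutes
for t in (0, 5, 10, 15, 20, 30):
    print(t, round(proportion_answered(t, k=0.5, t_mid=10), 3))
```

The printed values stay low at first, rise steeply around the midpoint, then flatten as they approach the ceiling.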

References

- [1] National Library of Medicine. Information Storage and Retrieval. Retrieved on 2007-12-12.

- [2] Sagan, Carl (1993). The Dragons of Eden: Speculations on the Evolution of Human Intelligence. New York: Ballantine Books. ISBN 0-345-34629-7.

- [3] de Bliek R, Friedman CP, Wildemuth BM, Martz JM, Twarog RG, File D (1994). "Information retrieved from a database and the augmentation of personal knowledge". J Am Med Inform Assoc 1 (4): 328–38. PMID 7719819.

- [4] Sparrow, Betsy; Jenny Liu, Daniel M. Wegner (2011-07-14). "Google Effects on Memory: Cognitive Consequences of Having Information at Our Fingertips". Science. DOI:10.1126/science.1207745. Retrieved on 2011-07-16.

- [5] Mulvaney, S. A., Bickman, L., Giuse, N. B., Lambert, E. W., Sathe, N. A., & Jerome, R. N. (2008). A randomized effectiveness trial of a clinical informatics consult service: impact on evidence-based decision-making and knowledge implementation, J Am Med Inform Assoc, 15(2), 203-211. doi: 10.1197/jamia.M2461.

- [6] Wright A. (2009) Exploring a ‘Deep Web’ That Google Can’t Grasp. New York Times.

- [7] Shaughnessy AF, Slawson DC, Bennett JH (November 1994). "Becoming an information master: a guidebook to the medical information jungle". J Fam Pract 39 (5): 489–99. PMID 7964548.

- [8] Garfield, E. “ISI Eases Scientists’ Information Problems: Provides Convenient Orderly Access to Literature,” Karger Gazette No. 13, pg. 2 (March 1966). Reprinted as “The Who and Why of ISI,” Current Contents No. 13, pages 5-6 (March 5, 1969), which was reprinted in Essays of an Information Scientist, Volume 1: ISI Press, pages 33-37 (1977). http://www.garfield.library.upenn.edu/essays/V1p033y1962-73.pdf

- [9] Tanna GV, Sood MM, Schiff J, Schwartz D, Naimark DM (2011). "Do E-mail Alerts of New Research Increase Knowledge Translation? A 'Nephrology Now' Randomized Control Trial". Acad Med 86 (1): 132-138. DOI:10.1097/ACM.0b013e3181ffe89e. PMID 21099399.

- [10] Roland M. Grad et al., “Impact of Research-based Synopses Delivered as Daily email: A Prospective Observational Study,” J Am Med Inform Assoc (December 20, 2007), http://www.jamia.org/cgi/content/abstract/M2563v1 (accessed December 21, 2007).

- [11] Koerner B (2008). Algorithms Are Terrific. But to Search Smarter, Find a Person. Wired Magazine. Retrieved on 2008-04-04.

- [12] Baeza-Yates, Ricardo; Ribeiro-Neto, Berthier (2009). Modern information retrieval. Boston: Addison-Wesley. ISBN 0-321-41691-0.

- [13] Hersh, William R (2001). “Information Retrieval Systems”, Fagan, Lawrence Marvin; Shortliffe, Edward Hance; Perreault, Leslie E.; Wiederhold, Gio: Medical Informatics: Computer Applications in Health Care and Biomedicine. Berlin: Springer, 549. ISBN 0-387-98472-0.

- [14] Hersh WR, Crabtree MK, Hickam DH, et al (2002). "Factors associated with success in searching MEDLINE and applying evidence to answer clinical questions". J Am Med Inform Assoc 9 (3): 283–93. PMID 11971889. PMC 344588.

- [15] Ely JW, Osheroff JA, Ebell MH, et al (March 2002). "Obstacles to answering doctors' questions about patient care with evidence: qualitative study". BMJ 324 (7339): 710. PMID 11909789. PMC 99056.

- [16] Porter MF. An algorithm for suffix stripping. Program. 1980;14:130–7.

- [17] Schwartz LM, Woloshin S, Welch HG (2007). "The drug facts box: providing consumers with simple tabular data on drug benefit and harm". Med Decis Making 27 (5): 655-62. DOI:10.1177/0272989X07306786. PMID 17873258.

- [18] Beck AL, Bergman DA (September 1986). "Using structured medical information to improve students' problem-solving performance". J Med Educ 61 (9 Pt 1): 749–56. PMID 3528494.

- [19] Nielsen J (1996). Writing Inverted Pyramids in Cyberspace (Alertbox). Retrieved on 2007-12-12.

- [20] Lau AY, Coiera EW (October 2008). "Can cognitive biases during consumer health information searches be reduced to improve decision making?". J Am Med Inform Assoc. DOI:10.1197/jamia.M2557. PMID 18952948.

- [21] John Battelle. The Search: How Google and Its Rivals Rewrote the Rules of Business and Transformed Our Culture. Portfolio Trade. ISBN 1-59184-141-0.

- [22] Verhoeff, J (1961). Inefficiency of the use of Boolean functions for information retrieval system. Communications of the ACM 4: 557. DOI:10.1145/366853.366861

- [23] Coiera E, Westbrook JI, Rogers K (2008 Sep-Oct). "Clinical decision velocity is increased when meta-search filters enhance an evidence retrieval system". J Am Med Inform Assoc 15 (5): 638-46. DOI:10.1197/jamia.M2765. PMID 18579828. PMC 2528038.

- [24] Gruppen LD, Wolf FM, Van Voorhees C, Stross JK (1988). "The influence of general and case-related experience on primary care treatment decision making". Arch. Intern. Med. 148 (12): 2657–63. PMID 3196128.

- [25] McKibbon KA, Fridsma DB, Crowley RS (2007). "How primary care physicians' attitudes toward risk and uncertainty affect their use of electronic information resources". J Med Libr Assoc 95 (2): 138–46, e49–50. DOI:10.3163/1536-5050.95.2.138. PMID 17443246.

- [26] Shelagh A. Mulvaney et al., “A Randomized Effectiveness Trial of a Clinical Informatics Consult Service: Impact on Evidence Based Decision-Making and Knowledge Implementation,” J Am Med Inform Assoc (December 20, 2007), http://www.jamia.org/cgi/content/abstract/M2461v1 (accessed December 21, 2007).

- [27] McKibbon KA, Fridsma DB (2006). "Effectiveness of clinician-selected electronic information resources for answering primary care physicians' information needs". J Am Med Inform Assoc 13 (6): 653–9. DOI:10.1197/jamia.M2087. PMID 16929042. PMC 1656967.

- [28] Westbrook JI, Gosling AS, Coiera EW (2005). "The impact of an online evidence system on confidence in decision making in a controlled setting". Med Decis Making 25 (2): 178–85. DOI:10.1177/0272989X05275155. PMID 15800302.

- [29] Crowley SD, Owens TA, Schardt CM, et al (March 2003). "A Web-based compendium of clinical questions and medical evidence to educate internal medicine residents". Acad Med 78 (3): 270–4. PMID 12634206.

- [30] Marshall JG (April 1992). "The impact of the hospital library on clinical decision making: the Rochester study". Bull Med Libr Assoc 80 (2): 169–78. PMID 1600426. PMC 225641.

- [31] King DN (October 1987). "The contribution of hospital library information services to clinical care: a study in eight hospitals". Bull Med Libr Assoc 75 (4): 291–301. PMID 3450340. PMC 227744.

- [32] Westbrook JI, Coiera EW, Sophie Gosling A, Braithwaite J (2007 Feb-Mar). "Critical incidents and journey mapping as techniques to evaluate the impact of online evidence retrieval systems on health care delivery and patient outcomes". Int J Med Inform 76 (2-3): 234-45. DOI:10.1016/j.ijmedinf.2006.03.006. PMID 16798071.

- [33] Lindberg DA, Siegel ER, Rapp BA, Wallingford KT, Wilson SR (1993 Jun 23-30). "Use of MEDLINE by physicians for clinical problem solving". JAMA 269 (24): 3124-9. PMID 8505815.

- [34] Lancaster, Frederick Wilfrid; Warner, Amy J. (1993). Information retrieval today. Arlington, Va: Information Resources Press. ISBN 0-87815-064-1.

- [35] Hersh, William R. (2008). Information Retrieval: A Health and Biomedical Perspective (Health Informatics). Berlin: Springer. ISBN 0-387-78702-X.

- [36] Croft, Bruce; Metzler, Donald; Strohman, Trevor (2009). Search Engines: Information Retrieval in Practice. Harlow: Addison Wesley. ISBN 0-13-607224-0.

- [37] Cabell CH, Schardt C, Sanders L, Corey GR, Keitz SA (December 2001). "Resident utilization of information technology". J Gen Intern Med 16 (12): 838–44. PMID 11903763. PMC 1495306.

- [38] Fenton SH, Badgett RG (July 2007). "A comparison of primary care information content in UpToDate and the National Guideline Clearinghouse". J Med Libr Assoc 95 (3): 255–9. DOI:10.3163/1536-5050.95.3.255. PMID 17641755. PMC 1924927.

- [39] Hersh WR, Buckley C, Leone TJ, Hickam DH, OHSUMED: An interactive retrieval evaluation and new large test collection for research, Proceedings of the 17th Annual ACM SIGIR Conference, 1994, 192-201.

- [40] Lucas BP, Evans AT, Reilly BM, et al (May 2004). "The impact of evidence on physicians' inpatient treatment decisions". J Gen Intern Med 19 (5 Pt 1): 402–9. DOI:10.1111/j.1525-1497.2004.30306.x. PMID 15109337. PMC 1492243.

- [41] Ely JW, Osheroff JA, Chambliss ML, Ebell MH, Rosenbaum ME (2005). "Answering physicians' clinical questions: obstacles and potential solutions". J Am Med Inform Assoc 12 (2): 217–24. DOI:10.1197/jamia.M1608. PMID 15561792. PMC 551553.

- [42] Gorman P (2001). "Information needs in primary care: a survey of rural and nonrural primary care physicians". Stud Health Technol Inform 84 (Pt 1): 338–42. PMID 11604759.

- [43] Alper BS, Stevermer JJ, White DS, Ewigman BG (November 2001). "Answering family physicians' clinical questions using electronic medical databases". J Fam Pract 50 (11): 960–5. PMID 11711012.

- [44] Haynes RB, McKibbon KA, Walker CJ, Ryan N, Fitzgerald D, Ramsden MF (January 1990). "Online access to MEDLINE in clinical settings. A study of use and usefulness". Ann. Intern. Med. 112 (1): 78–84. PMID 2403476.

- [45] Wallace BC, Trikalinos TA, Lau J, Brodley C, Schmid CH (2010). "Semi-automated screening of biomedical citations for systematic reviews". BMC Bioinformatics 11: 55. DOI:10.1186/1471-2105-11-55. PMID 20102628. PMC 2824679.

- [46] Uzuner O, Solti I, Cadag E (2010). "Extracting medication information from clinical text". J Am Med Inform Assoc 17 (5): 514-8. DOI:10.1136/jamia.2010.003947. PMID 20819854. PMC 2995677.

- [47] Toth B, Gray JA, Brice A (2005). "The number needed to read-a new measure of journal value". Health Info Libr J 22 (2): 81–2. DOI:10.1111/j.1471-1842.2005.00568.x. PMID 15910578.

- [48] McKibbon KA, Wilczynski NL, Haynes RB (2004). "What do evidence-based secondary journals tell us about the publication of clinically important articles in primary healthcare journals?". BMC Med 2: 33. DOI:10.1186/1741-7015-2-33. PMID 15350200.

- [49] Bachmann LM, Coray R, Estermann P, Ter Riet G (2002). "Identifying diagnostic studies in MEDLINE: reducing the number needed to read". J Am Med Inform Assoc 9 (6): 653–8. PMID 12386115.

- [50] Haase A, Follmann M, Skipka G, Kirchner H (2007). "Developing search strategies for clinical practice guidelines in SUMSearch and Google Scholar and assessing their retrieval performance". BMC Med Res Methodol 7: 28. DOI:10.1186/1471-2288-7-28. PMID 17603909.

- [51] Herskovic JR, Iyengar MS, Bernstam EV (2007). "Using hit curves to compare search algorithm performance". J Biomed Inform 40 (2): 93–9. DOI:10.1016/j.jbi.2005.12.007. PMID 16469545.

- [52] Bernstam EV, Herskovic JR, Aphinyanaphongs Y, Aliferis CF, Sriram MG, Hersh WR (2006). "Using citation data to improve retrieval from MEDLINE". J Am Med Inform Assoc 13 (1): 96–105. DOI:10.1197/jamia.M1909. PMID 16221938.

- [53] Del Mar CB, Silagy CA, Glasziou PP, Weller D, Spinks AB, Bernath V et al. (2001). "Feasibility of an evidence-based literature search service for general practitioners". Med J Aust 175 (3): 134-7. PMID 11548078.

In addition:

- Baeza-Yates, Ricardo; Ribeiro-Neto, Berthier (2009). Modern information retrieval. Boston: Addison-Wesley. ISBN 0-321-41691-0.

- Shortliffe, Edward Hance; Cimino, James D. (2006). Biomedical informatics: computer applications in health care and biomedicine. Berlin: Springer. ISBN 0-387-28986-0.
