Cryptography

{{subpages}}

{{TOC|right}}

The term '''cryptography''' comes from [[Greek language|Greek]] κρυπτός ''kryptós'' "hidden," and γράφειν ''gráfein'' "to write". In the simplest case, the sender hides (encrypts) a message (plaintext) by converting it to an unreadable jumble of apparently random symbols (ciphertext). The process involves a [[cryptographic key | key]], a secret value that controls some of the operations. The intended receiver knows the key, so he can recover the original text (decrypt the message). Someone who intercepts the message sees only apparently random symbols; if the system performs as designed, then without the key an eavesdropper cannot read messages.

Various techniques for obscuring messages have been in use by the military, by spies, and by diplomats for several millennia and in commerce at least since the Renaissance; see [[History of cryptography]] for details. With the spread of computers and electronic communication systems in recent decades, cryptography has become much more broadly important.

Banks use cryptography to identify their customers for [[Automatic Teller Machine]] (ATM) transactions and to secure messages between the ATM and the bank's computers. Satellite TV companies use it to control who can access various channels. Companies use it to protect proprietary data. Internet protocols use it to provide various security services; see [[#Hybrid_cryptosystems | below]] for details. Cryptography can make email unreadable except by the intended recipients, or protect data on a laptop computer so that a thief cannot get confidential files. Even in the military, where cryptography has been important since the time of [[Caesar_cipher | Julius Caesar]], the range of uses is growing as new computing and communication systems come into play.

With those changes comes a shift in emphasis. Cryptography is, of course, still used to provide secrecy. However, in many cryptographic applications, the issue is authentication rather than secrecy. The [[Personal identification number]] (PIN) for an ATM card is a secret, but it is not used as a key to hide the transaction; its purpose is to prove that it is the customer at the machine, not someone with a forged or stolen card. The techniques used for this are somewhat different from those for secrecy, and the techniques for authenticating a person are different from those for authenticating data — for example checking that a message has been received accurately or that a contract has not been altered. However, all these fall in the domain of cryptography. See [[Information_security#Information_transfer | information security]] for the different types of authentication, and [[#Cryptographic_hash_algorithms | hashes]] and [[#Public key systems | public key systems]] below for techniques used to provide them.

Over the past few decades, cryptography has emerged as an academic discipline. The seminal paper was [[Claude Shannon]]'s 1949 "Communication Theory of Secrecy Systems"<ref>{{cite paper
| author = C. E. Shannon
| title = Communication Theory of Secrecy Systems
| journal = Bell System Technical Journal
| volume = 28
| date = 1949
| pages = 656-715
| url = http://netlab.cs.ucla.edu/wiki/files/shannon1949.pdf }}</ref>.

Today there are journals, conferences, courses, textbooks, a professional association and a great deal of online material; see our [[Cryptography/Bibliography|bibliography]] and [[Cryptography/External Links|external links page]] for details.

In roughly the same period, cryptography has become important in a number of political and legal controversies. Cryptography can be an important tool for personal privacy and freedom of speech, but it can also be used by criminals or terrorists. Should there be legal restrictions? Cryptography can attempt to protect things like e-books or movies from unauthorised access; what should the law say about those uses? Such questions are taken up [[#Legal and political issues|below]] and in more detail in a [[politics of cryptography]] article.

Up to the early 20th century, cryptography was chiefly concerned with [[language|linguistic]] patterns. Since then the emphasis has shifted, and cryptography now makes extensive use of mathematics, primarily [[information theory]], [[Computational complexity theory|computational complexity]], [[abstract algebra]], and [[number theory]]. However, cryptography is not ''just'' a branch of mathematics. It might also be considered a branch of [[information security]] or of [[engineering]].

As well as being aware of cryptographic history and techniques, and of [[cryptanalysis|cryptanalytic]] methods, cryptographers must also carefully consider probable future developments. For instance, the effects of [[Moore's Law]] on the speed of [[brute force attack]]s must be taken into account when specifying [[cryptographic key#key length|key length]]s, and the potential effects of [[quantum computing]] are already being considered. [[Quantum cryptography]] is an active research area.
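To show why key length and hardware speed interact, here is a back-of-envelope sketch in Python. The attacker speed of 10<sup>12</sup> keys per second and the "doubling" model of hardware improvement are assumptions chosen for illustration, not figures from this article.

```python
# Back-of-envelope brute-force estimate. All rates are hypothetical inputs.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def expected_search_years(key_bits: int, keys_per_second: float) -> float:
    """Average time, in years, to find a key by trying every possibility.

    A keyspace of key_bits bits holds 2**key_bits keys; on average the
    right one turns up after searching half of them.
    """
    average_trials = 2 ** (key_bits - 1)
    return average_trials / keys_per_second / SECONDS_PER_YEAR


def years_after_doublings(key_bits: int, keys_per_second: float, doublings: int) -> float:
    """Same estimate after the attacker's speed has doubled `doublings` times."""
    return expected_search_years(key_bits, keys_per_second * 2 ** doublings)


if __name__ == "__main__":
    rate = 1e12  # assumed attacker speed: 10**12 keys per second
    for bits in (56, 80, 128):
        print(bits, "bits:", expected_search_years(bits, rate), "years")
    # Ten doublings make the same search about 1024 times faster.
    print(years_after_doublings(80, rate, 10))
```

With the assumed rate, a 56-bit key falls in about ten hours on average, an 80-bit key in roughly 19,000 years, and a 128-bit key in more than 10<sup>18</sup> years. Each extra key bit doubles the work, while each doubling of attacker speed halves it, which is why designers add margin to key lengths rather than sizing them for today's hardware.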

== Cryptography is difficult ==

Cryptography, and more generally [[information security]], is difficult to do well. For one thing, it is inherently hard to design a system that resists efforts by an adversary to compromise it, considering that the opponent may be intelligent and motivated, will look for attacks that the designer did not anticipate, and may have large resources.

To be secure, '''the system must resist all attacks'''; to break it, '''the attacker need only find one''' effective attack. Moreover, new attacks may be discovered and old ones may be improved or may benefit from new technology, such as faster computers or larger storage devices, but there is no way for attacks to become weaker or a system stronger over time. Schneier calls this "the cryptographer's adage: attacks always get better, they never get worse."<ref>{{citation
| author = Bruce Schneier
| title = Another New AES Attack
| date = July 2009
| url = http://www.schneier.com/blog/archives/2009/07/another_new_aes.html
}}</ref>

Also, neither the user nor the system designer gets feedback on problems. If your word processor fails or your bank's web site goes down, you see the results and are quite likely to complain to the supplier. If your cryptosystem fails, you may not know. If your bank's cryptosystem fails, they may not know, and may not tell you if they do. If a serious attacker — a criminal breaking into a bank, a government running a monitoring program, an enemy in war, or any other — breaks a cryptosystem, he will certainly not tell the users of that system. If the users become aware of the break, then they will change their system, so it is very much in the attacker's interest to keep the break secret. In a famous example, the British [[ULTRA]] project read many German ciphers through most of World War II, and the Germans never realised it.

Cryptographers routinely publish details of their designs and invite attacks. In accordance with [[Kerckhoffs' Principle]], a cryptosystem cannot be considered secure unless it remains safe even when the attacker knows all details except the key in use. A published design that withstands analysis is a candidate for trust, but '''no unpublished design can be considered trustworthy'''. Without publication and analysis, there is no basis for trust. Of course "published" has a special meaning in some situations. Someone in a major government cryptographic agency need not make a design public to have it analysed; he need only ask the cryptanalysts down the hall to have a look.

Having a design publicly broken might be a bit embarrassing for the designer, but he can console himself that he is in good company; breaks routinely happen. Even the [[NSA]] can get it wrong; [[Matt Blaze]] found a flaw<ref>{{citation
| title = Protocol failure in the escrowed encryption standard
| url = http://portal.acm.org/citation.cfm?id=191193
| author = Matt Blaze
| date = 1994}}</ref> in their [[Clipper chip]] within weeks of the design being declassified. Other large organisations can too: Deutsche Telekom's [[MAGENTA (cipher)|Magenta]] cipher was broken<ref>{{citation
| author = Eli Biham, Alex Biryukov, Niels Ferguson, Lars Knudsen, Bruce Schneier and Adi Shamir
| title = Cryptanalysis of Magenta
| conference = Second AES candidate conference
| date = April 1999
| url = http://www.schneier.com/paper-magenta.pdf
}}</ref> by a team that included [[Bruce Schneier]] within hours of its first being made public at an [[AES candidate]]s' conference. Nor are the experts immune — they may find flaws in other people's ciphers, but that does not mean their own designs are necessarily safe. Blaze and Schneier designed a cipher called MacGuffin<ref>{{citation
| title = The MacGuffin Block Cipher Algorithm
| author = Matt Blaze and Bruce Schneier
| date = 1995
| url = http://www.schneier.com/paper-macguffin.html
}}</ref> that was broken<ref>{{citation
| title = Cryptanalysis of McGuffin
| author = Vincent Rijmen & Bart Preneel
| date = 1995
}}</ref> before the end of the conference at which they presented it.

In any case, having a design broken — even broken by (horrors!) some unknown graduate student rather than a famous expert — is far less embarrassing than having a deployed system fall to a malicious attacker. At least when both design and attacks are in public research literature, the designer can either fix any problems that are found or discard one approach and try something different.

The hard part of security system design is not usually the cryptographic techniques that are used in the system. Designing a good cryptographic primitive — a [[block cipher]], [[stream cipher]] or [[cryptographic hash]] — is indeed a tricky business, but for most applications designing new primitives is unnecessary. Good primitives are readily available; see the linked articles. The hard parts are fitting them together into systems and managing those systems to actually achieve [[information security |security goals]]. Schneier's preface to ''Secrets and Lies''<ref name="secretslies">{{citation
| title = Secrets & Lies: Digital Security in a Networked World
| author = Bruce Schneier
| url = http://www.schneier.com/book-sandl.html
| date = 2000
| isbn = 0-471-25311-1
}}</ref> discusses this in some detail. His summary:

{{quotation|If you think technology can solve your security problems, then you don't understand the problems and you don't understand the technology.<ref name="secretslies" />}}

For links to several papers on the difficulties of cryptography, see our [[Cryptography/Bibliography#Difficulties_of_cryptography|bibliography]].

Then there is the optimism of programmers. As with databases and real-time programming, cryptography looks deceptively simple. The basic ideas are indeed simple and almost any programmer can fairly easily implement something that handles straightforward cases. However, as in those other fields, there are also some quite tricky aspects to the problems, and anyone who tackles the hard cases without ''both'' some study of relevant theory ''and'' considerable practical experience is ''almost certain to get it wrong''. This is demonstrated far too often.

For example, companies that implement their own cryptography as part of a product often end up with something that is easily broken. Examples include the addition of encryption to products like [[Microsoft Office]]<ref>{{cite paper|author=Hongjun Wu|title=The Misuse of RC4 in Microsoft Word and Excel|url=http://eprint.iacr.org/2005/007}}</ref>, Netscape<ref>{{cite paper | title=Randomness and the Netscape Browser: How secure is the World Wide Web? | date=January 1996 | journal=Dr. Dobb's Journal | author = Ian Goldberg and David Wagner | url=http://www.cs.berkeley.edu/~daw/papers/ddj-netscape.html}}</ref>, [[Adobe]]'s [[Portable Document Format]] (PDF)<ref>{{cite paper
|author=David Touretzky
|title= Gallery of Adobe Remedies
| url= http://www-2.cs.cmu.edu/~dst/Adobe/Gallery/
}}</ref>, and many others. Generally, such problems are fixed in later releases. These are major companies, and both programmers and managers on their product teams are presumably competent, but they ''routinely'' get the cryptography wrong.

Even when they use standardised cryptographic protocols, they may still get the implementation wrong and create large weaknesses. For example, Microsoft's first version of [[PPTP]] was vulnerable to a simple attack<ref>{{citation
| author = Bruce Schneier and Mudge
| title = Cryptanalysis of Microsoft's Point-to-Point Tunneling Protocol (PPTP)
| publisher = ACM Press
| url = http://www.schneier.com/pptp.html
}}</ref> because of an [[Stream_cipher#Reusing_pseudorandom_material|elementary error]] in implementation.
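The linked "elementary error" is reuse of pseudorandom material. A minimal Python sketch of why reusing a keystream is fatal for any stream cipher follows; it uses `os.urandom` as a stand-in for a keystream and two made-up messages, and it illustrates the general flaw rather than modelling PPTP itself.

```python
import os


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings position by position (stops at the shorter one)."""
    return bytes(x ^ y for x, y in zip(a, b))


# One keystream, wrongly used to encrypt two different messages.
keystream = os.urandom(32)
p1 = b"Transfer 100 to Alice"
p2 = b"Transfer 900 to Carol"
c1 = xor_bytes(p1, keystream)
c2 = xor_bytes(p2, keystream)

# The eavesdropper never sees the keystream, but XORing the two ciphertexts
# cancels it and leaves the XOR of the two plaintexts.
leak = xor_bytes(c1, c2)
assert leak == xor_bytes(p1, p2)

# Knowing (or guessing) one plaintext then reveals the other outright.
recovered = xor_bytes(leak, p1)
print(recovered)  # b'Transfer 900 to Carol'
```

No knowledge of the key is needed: the attacker only has to guess part of one message, which is often easy with formatted data such as protocol headers.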

There are also failures in products where encryption is central to the design. Almost every company or standards body that designs a cryptosystem in secret, ignoring [[Kerckhoffs' Principle]], produces something that is easily broken. Examples include the [[Contents Scrambling System|Content Scramble System]] (CSS) encryption on [[DVD]]s, the [[WEP]] encryption in wireless networking,<ref>{{cite paper|author=Nikita Borisov, Ian Goldberg, and David Wagner|title=Security of the WEP algorithm|url=http://www.isaac.cs.berkeley.edu/isaac/wep-faq.html}}</ref> and the [[A5 (cipher)|A5]] encryption in [[GSM]] cell phones<ref>{{cite paper
| author = Greg Rose
| title = A precis of the new attacks on GSM encryption
| url = http://www.qualcomm.com.au/PublicationsDocs/GSM_Attacks.pdf}}</ref>.

Such problems are much harder to fix if the flawed designs are included in standards and/or have widely deployed hardware implementations; updating those is much more difficult than releasing a new software version.

Beyond the real difficulties in implementing real products are some systems that ''both'' get the cryptography horribly wrong ''and'' make extravagant marketing claims. These are often referred to as [[Snake oil (cryptography)|snake oil]].

== Principles and terms ==

'''Cryptography''' proper is the study of methods of '''encryption''' and '''decryption'''. [[Cryptanalysis]] or "codebreaking" is the study of how to break into an encrypted message without possession of the key. Methods of defeating cryptosystems have a long history and an extensive literature. Anyone designing or deploying a cryptosystem must take cryptanalytic results into account.

Cryptology ("the study of secrets", from the Greek) is the more general term encompassing both cryptography and cryptanalysis.

"'''Crypto'''" is sometimes used as a short form for any of the above.

===Codes versus ciphers===

In common usage, the term "[[code (cryptography)|code]]" is often used to mean any method of encryption or meaning-concealment. In cryptography, however, '''code''' is more specific, meaning a linguistic procedure which replaces a unit of plain text with a code word or code phrase. For example, "apple pie" might replace "attack at dawn". Each code word or code phrase carries a specific meaning.

A [[cipher]] (or ''cypher'') is a system of [[algorithm]]s for encryption and decryption. '''Ciphers''' operate at a lower level than codes, using a mathematical operation to convert understandable '''plaintext''' into unintelligible '''ciphertext'''. The meaning of the material is irrelevant; a cipher just manipulates letters or bits, or groups of those. A cipher takes as input a key and plaintext, and produces ciphertext as output. For decryption, the process is reversed to turn ciphertext back into plaintext.
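As a concrete instance of "key plus plaintext in, ciphertext out", here is a Python sketch of the Caesar cipher mentioned at the top of this article, where the key is a shift of 0 to 25 letter positions. It is a toy that shows the structure of a cipher; with only 26 possible keys it offers no real security.

```python
import string

ALPHABET = string.ascii_uppercase


def caesar_encrypt(key: int, plaintext: str) -> str:
    """Shift each letter forward by `key` places; leave other characters alone."""
    out = []
    for ch in plaintext.upper():
        if ch in ALPHABET:
            out.append(ALPHABET[(ALPHABET.index(ch) + key) % 26])
        else:
            out.append(ch)
    return "".join(out)


def caesar_decrypt(key: int, ciphertext: str) -> str:
    """Decryption is the same operation with the shift reversed."""
    return caesar_encrypt(-key, ciphertext)


if __name__ == "__main__":
    ct = caesar_encrypt(3, "attack at dawn")
    print(ct)                     # DWWDFN DW GDZQ
    print(caesar_decrypt(3, ct))  # ATTACK AT DAWN
```

The cipher knows nothing about the meaning of "attack at dawn"; it only manipulates letters, and changing the key changes the output completely.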

Ciphertext should bear no resemblance to the original message. Ideally, it should be indistinguishable from a random string of symbols. Any non-random properties may provide an opening for a skilled cryptanalyst.

The exact operation of a cipher is controlled by a ''[[key (cryptography)|key]]'', which is a secret parameter for the cipher algorithm. The key may be different every day, or even different for every message. By contrast, the operation of a code is controlled by a code book which lists all the codes; these are harder to change.

Codes are not generally practical for lengthy or complex communications, and are difficult to do in software, as they are as much linguistic as mathematical problems. If the only times the messages need to name are "dawn", "noon", "dusk" and "midnight", then a code is fine; usable code words might be "John", "George", "Paul" and "Ringo". However, if messages must be able to specify things like 11:37 AM, a code is inconvenient. Also if a code is used many times, an enemy is quite likely to work out that "John" means "dawn" or whatever; there is no long-term security.
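The four-word code in this example can be sketched in Python as a simple lookup table. The function names are illustrative only. The sketch shows the two properties discussed: a code word replaces a whole meaning rather than letters, and anything not in the book simply cannot be sent.

```python
# The code from the example: each code word replaces a whole meaning.
CODEBOOK = {"dawn": "John", "noon": "George", "dusk": "Paul", "midnight": "Ringo"}
# The receiver's copy of the book, indexed the other way round.
REVERSE = {word: meaning for meaning, word in CODEBOOK.items()}


def encode(meaning: str) -> str:
    """Replace a meaning with its code word; unlisted meanings cannot be sent."""
    if meaning not in CODEBOOK:
        raise ValueError(f"the codebook has no entry for {meaning!r}")
    return CODEBOOK[meaning]


def decode(code_word: str) -> str:
    """Look a received code word up in the receiver's copy of the book."""
    return REVERSE[code_word]


if __name__ == "__main__":
    print(encode("dusk"))    # Paul
    print(decode("Ringo"))   # midnight
    try:
        encode("11:37 AM")
    except ValueError as err:
        print(err)
```

There is no key here: the whole table is the secret, so anyone holding a copy of it can read every message.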

An important difference is that changing a code requires retraining users or creating and (securely!) delivering new code books, but changing a cipher key is much easier. If an enemy gets a copy of your codebook (whether or not you are aware of this!), then the code becomes worthless until you replace those books. By contrast, having an enemy get one of your cipher machines or learn the algorithm for a software cipher should do no harm at all — see [[Kerckhoffs' Principle]]. If an enemy learns the key, that defeats a cipher, but keys are easily changed; in fact, the procedures for any cipher usage normally include some method for routinely changing the key.

For the above reasons, ciphers are generally preferred in practice. Nevertheless, there are niches where codes are quite useful. A small number of codes can represent a set of operations known to sender and receiver. "Climb Mount Niitaka" was a final order for the Japanese mobile striking fleet to attack Pearl Harbor, while "visit Aunt Shirley" could order a terrorist to trigger a chemical weapon at a particular place. If the codes are not re-used or foolishly chosen (e.g. using "flyboy" for an Air Force officer) and do not have a pattern (e.g. using "Lancelot" and "Galahad" for senior officers, making it easy for an enemy to guess "Arthur" or "Gawain"), then there is no information to help a cryptanalyst and the system is ''extremely'' secure.

Codes may also be combined with ciphers. Then if an enemy breaks a cipher, much of what he gets will be code words. Unless he either already knows the code words or has enough broken messages to search for codeword re-use, the code may defeat him even if the cipher did not. For example, if the Americans had intercepted and decrypted a message saying "Climb Mount Niitaka" just before Pearl Harbor, they would likely not have known its meaning.

There are historical examples of enciphered codes or [[encicode]]s. There are also methods of embedding code phrases into apparently innocent messages; see [[#Steganography|steganography]] below.

In military systems, a fairly elaborate system of [[compartmented control system#Code words and nicknames|code word]]s may be used.

=== Keying ===

What a cipher attempts to do is to replace a difficult problem, keeping messages secret, with a much more tractable one, managing a set of keys. Of course this makes the keys critically important. Keys need to be '''large enough''' and '''highly random'''; those two properties together make them effectively impossible to guess or to find with a [[brute force]] search. See [[cryptographic key]] for discussion of the various types of key and their properties, and [[#Random numbers| random numbers]] below for techniques used to generate good ones.
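Generating a key that is both large and highly random is, in most programming environments, a call to a cryptographically strong random source. A minimal Python sketch using the standard `secrets` module follows; the 128-bit default is an illustrative choice, not a recommendation from this article. Python's general-purpose `random` module is documented as unsuitable for security purposes and should not be used for keys.

```python
import secrets


def new_key(bits: int = 128) -> bytes:
    """Return a fresh key drawn from the operating system's
    cryptographically strong random source."""
    if bits <= 0 or bits % 8:
        raise ValueError("key size must be a positive whole number of bytes")
    return secrets.token_bytes(bits // 8)


if __name__ == "__main__":
    key = new_key()
    print(key.hex())   # 32 hex digits, different on every run
    # Each extra bit doubles the keyspace an exhaustive search must cover.
    print(f"{2 ** 128:.3e} possible 128-bit keys")
```

Size alone is not enough: a 128-bit value derived from, say, a dictionary word has far fewer than 2<sup>128</sup> likely values, so an attacker would search those first.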

[[Kerckhoffs' Principle]] holds that no system should be considered secure unless it can resist an attacker who knows ''all its details except the key''. The most fearsome attacker is one with strong motivation, large resources, and few scruples; such an attacker ''will'' learn all the other details sooner or later. To defend against him requires a system whose security depends ''only'' on keeping the keys secret.

More generally, managing relatively small keys — creating good ones, keeping them secret, ensuring that the right people have them, and changing them from time to time — is not easy, but it is at least a reasonable proposition in many cases. See [[key management]] for the techniques.

However, in almost all cases, ''it is a bad idea to rely on a system that requires large things to be kept secret''. [[Security through obscurity]] — designing a system that depends for its security on keeping its inner workings secret — is not usually a good approach. Nor, in most cases, is a [[one-time pad]], which needs a key as large as the whole set of messages it will protect, or a [[#Codes_versus_ciphers|code]], which is only secure as long as the enemy does not have the codebook. There are niches where each of those techniques can be used, but managing large secrets is always problematic and often entirely impractical. In many cases, it is no easier than the original difficult problem, keeping the messages secret.

== Basic mechanisms ==

In describing cryptographic systems, the players are traditionally called [[Alice and Bob]], or just A and B. We use these names throughout the discussion below.

=== Secret key systems ===

Until the 1970s, all (publicly known) cryptosystems used '''secret key''' or [[symmetric key cryptography]] methods. In such a system, there is only one key for a message; that key can be used either to encrypt or decrypt the message, and it must be kept secret. Both the sender and receiver must have the key, and third parties (potential intruders) must be prevented from obtaining the key. Symmetric key encryption may also be called ''traditional'', ''shared-secret'', ''secret-key'', or ''conventional'' encryption.

Historically, [[cipher]]s worked at the level of letters or groups of letters; see [[history of cryptography]] for details. Attacks on them used techniques based largely on linguistic analysis, such as frequency counting; see [[cryptanalysis]]. <!-- This should become a more specific link when there is something better to link to -->
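Frequency counting can be sketched in a few lines against the simplest letter-level cipher, a Caesar shift. The sketch assumes English plaintext long enough that E is its commonest letter; the sample sentence and the shift of 11 are invented for the example.

```python
from collections import Counter
import string

ALPHABET = string.ascii_uppercase


def shift(text: str, k: int) -> str:
    """Caesar-shift the letters of `text` by k places; keep other characters."""
    return "".join(
        ALPHABET[(ALPHABET.index(c) + k) % 26] if c in ALPHABET else c
        for c in text.upper()
    )


def guess_shift(ciphertext: str) -> int:
    """Assume the commonest ciphertext letter stands for E, the commonest
    letter in typical English text, and return the implied shift."""
    letters = [c for c in ciphertext if c in ALPHABET]
    most_common = Counter(letters).most_common(1)[0][0]
    return (ALPHABET.index(most_common) - ALPHABET.index("E")) % 26


plain = "THE ENEMY WILL ENTER THE SECRET TUNNEL BEFORE THE SEVENTH BELL"
cipher = shift(plain, 11)
k = guess_shift(cipher)
print(k, shift(cipher, -k))
```

The attack never tries keys at random; the statistics of the language leak straight through the cipher. On short or unusual texts the commonest letter may not be E, and a real cryptanalyst would compare the whole frequency distribution rather than a single letter.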

==== Types of modern symmetric cipher ====

On computers, there are two main types of symmetric encryption algorithm:

A [[block cipher]] breaks the data up into fixed-size blocks and encrypts each block under control of the key. Since the message length will rarely be an integer number of blocks, there will usually need to be some form of "padding" to make the final block long enough. The block cipher itself defines how a single block is encrypted; [[Block cipher modes of operation | modes of operation]] specify how these operations are combined to achieve some larger goal.
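One widely used padding scheme, often called PKCS#7 padding, appends ''n'' bytes each of value ''n''. The Python sketch below assumes a 16-byte block size (the block size of AES) purely for illustration; it prepares data for a block cipher but does no encryption itself.

```python
def pad(data: bytes, block_size: int = 16) -> bytes:
    """PKCS#7-style padding: append n bytes each of value n, where
    1 <= n <= block_size, so the result is a whole number of blocks."""
    n = block_size - len(data) % block_size
    return data + bytes([n]) * n


def unpad(padded: bytes, block_size: int = 16) -> bytes:
    """Check and strip the padding added by pad()."""
    if not padded or len(padded) % block_size:
        raise ValueError("input is not a whole number of blocks")
    n = padded[-1]
    if not 1 <= n <= block_size or padded[-n:] != bytes([n]) * n:
        raise ValueError("bad padding")
    return padded[:-n]


def blocks(data: bytes, block_size: int = 16) -> list:
    """Split padded data into the fixed-size blocks a block cipher consumes."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]
```

For example, the 14-byte message `b"attack at dawn"` gains two bytes of value 2. A message that already fills its last block gains a whole extra block of padding, so the receiver can always tell unambiguously how much to remove.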

A [[stream cipher]] encrypts a stream of input data by combining it with a [[random number | pseudo-random]] stream of data; the pseudo-random stream is generated under control of the encryption key.
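The structure of a stream cipher can be sketched in Python. Here the pseudo-random generator is an assumption of the sketch: SHA-256 applied to the key, a per-message nonce, and a block counter. It is a toy built to show the shape of the technique, not a vetted stream cipher, and real systems should use an established design.

```python
import hashlib


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy pseudo-random generator: SHA-256 over key || nonce || block counter.

    Illustration only; this is not a vetted stream cipher design.
    """
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Combine data with the keystream by XOR; the same call encrypts and decrypts."""
    stream = keystream(key, nonce, len(data))
    return bytes(d ^ s for d, s in zip(data, stream))
```

Calling `xor_cipher` twice with the same key and nonce returns the original data, because XORing with the same stream twice cancels out. The nonce, assumed here to be a fixed-length value that differs for every message under one key, is what keeps two messages from being combined with the same keystream.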

To a great extent, the two are interchangeable; almost any task that needs a symmetric cipher can be done by either. In particular, any block cipher can be used as a stream cipher in some [[Block cipher modes of operation | modes of operation]]. In general, stream ciphers are faster than block ciphers, and some of them are very easy to implement in hardware; this makes them attractive for dedicated devices. However, which one is used in a particular application depends largely on the type of data to be encrypted. Oversimplifying slightly, stream ciphers work well for streams of data while block ciphers work well for chunks of data. Stream ciphers are the usual technique to encrypt a communication channel, for example in military radio or in cell phones, or to encrypt network traffic at the level of physical links. Block ciphers are usual for things like encrypting disk blocks, or network traffic at the packet level (see [[IPsec]]), or email messages ([[PGP]]).

Another method, usable manually or on a computer, is a [[one-time pad]]. This works much like a stream cipher, but it does not need to generate a pseudo-random stream because its key is a ''truly random stream as long as the message''. This is the only known cipher which is provably secure (provided the key is truly random and no part of it is ever re-used), but it is impractical for most applications because managing such keys is too difficult.
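A minimal Python sketch of one-time pad encryption and decryption follows. One assumption should be stated plainly: `secrets.token_bytes` draws from the operating system's cryptographically strong generator, which is a practical stand-in for, but not the same as, the truly random key that the security proof requires.

```python
import secrets


def otp_encrypt(message: bytes):
    """Generate a fresh key as long as the message and XOR the two.

    Returns (otp_key, ciphertext). The key must never be used again.
    """
    otp_key = secrets.token_bytes(len(message))
    ciphertext = bytes(m ^ k for m, k in zip(message, otp_key))
    return otp_key, ciphertext


def otp_decrypt(otp_key: bytes, ciphertext: bytes) -> bytes:
    """XOR the ciphertext with the same key to recover the message."""
    if len(otp_key) != len(ciphertext):
        raise ValueError("key and ciphertext lengths differ")
    return bytes(c ^ k for c, k in zip(ciphertext, otp_key))
```

The code is trivial; the hard part, as noted above, is that every message consumes an equal amount of key material, all of which must be delivered to the receiver in advance and kept secret.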

==== Key management ====

More generally, [[key management]] is a problem for any secret key system.

* It is ''critically'' important to '''protect keys''' from unauthorised access; if an enemy obtains the key, then he or she can read all messages ever sent with that key.
* It is necessary to '''change keys''' periodically, both to limit the damage if an attacker does get a key and to prevent various [[cryptanalysis|attacks]] which become possible if the enemy can collect a large sample of data encrypted with a single key.
* It is necessary to '''communicate keys'''; without a copy of the identical key, the intended receiver cannot decrypt the message.
* It is sometimes necessary to '''revoke keys''', for example if a key is compromised or someone leaves the organisation.

Managing all of these simultaneously is an inherently difficult problem. Moreover, the problem grows quadratically if there are many users. If ''N'' users must all be able to communicate with each other securely, then there are ''N''(''N''−1)/2 possible connections, each of which needs its own key. For large ''N'' this becomes quite unmanageable.
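The ''N''(''N''−1)/2 count is easy to tabulate; this short Python sketch shows how quickly it grows.

```python
def pairwise_keys(users: int) -> int:
    """Distinct secret keys needed so every pair of users shares its own key:
    N(N-1)/2, the number of unordered pairs among N users."""
    return users * (users - 1) // 2


if __name__ == "__main__":
    for n in (10, 100, 1000, 10000):
        print(f"{n:>6} users -> {pairwise_keys(n):>11,} keys")
```

Ten users need 45 keys, a thousand need 499,500, and ten thousand need nearly fifty million. Multiplying the number of users by ten multiplies the number of keys by roughly a hundred.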

One problem is where, and how, to safely store the key. In a manual system, you need a key that is long and hard to guess because keys that are short or guessable provide little security. However, such keys are hard to remember and if the user writes them down, then you have to worry about someone looking over his shoulder, or breaking in and copying the key, or the writing making an impression on the next page of a pad, and so on.

On a computer, keys must be protected so that enemies cannot obtain them. Simply storing the key unencrypted in a file or database is a poor strategy. A better method is to encrypt the key and store it in a file that is protected by the file system; this way, only authorized users of the system should be able to read the file. But then, where should one store the key used to encrypt the secret key? It becomes a recursive problem. Also, what about an attacker who can defeat the file system protection? If the key is stored encrypted but you have a program that decrypts and uses it, can an attacker obtain the key via a memory dump or a debugging tool? If a network is involved, can an attacker get keys by intercepting network packets? Can an attacker put a keystroke logger on the machine? If so, he can get everything you type, possibly including keys or passwords.

For access control, a common technique is ''two factor authentication'', combining "something you have" (e.g. your ATM card) with "something you know" (e.g. the PIN). An account number or other identifier stored on the card is combined with the PIN, using a cryptographic hash, and the hash checked before access is granted. In some systems, a third factor is used, a random challenge; this prevents an enemy from reading the hash from one transaction and using it to perform a different transaction.
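The combination of card data, PIN, hash and random challenge can be sketched in Python. Everything here is a simplified assumption for illustration: the account number and PIN are made up, SHA-256 is used for the hash, and HMAC binds the hash to each challenge. Real ATM networks use their own standardised schemes, typically inside tamper-resistant hardware; this is not one of them.

```python
import hashlib
import hmac
import secrets


def pin_hash(account: str, pin: str) -> bytes:
    """Combine something you have (account number from the card) with
    something you know (the PIN) in one cryptographic hash."""
    return hashlib.sha256(f"{account}:{pin}".encode()).digest()


# Bank side: stores only the hash, recorded when the card was issued.
# Account number and PIN are hypothetical example values.
STORED = {"12345678": pin_hash("12345678", "4921")}


def new_challenge() -> bytes:
    """A fresh random value for each transaction."""
    return secrets.token_bytes(16)


def terminal_response(account: str, pin: str, challenge: bytes) -> bytes:
    """Terminal answers the challenge using the hash, which is never sent itself."""
    return hmac.new(pin_hash(account, pin), challenge, hashlib.sha256).digest()


def bank_verifies(account: str, challenge: bytes, response: bytes) -> bool:
    """Recompute the expected answer and compare in constant time."""
    expected = hmac.new(STORED[account], challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)
```

Because every transaction gets a new challenge, a response recorded from one transaction is useless for the next. Note that a four-digit PIN has only 10,000 possible values, so if the stored hashes in a sketch like this ever leaked, the PINs could be found by trying them all; that is one reason real systems keep a secret key in the computation.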

Communicating keys is an even harder problem. With secret key encryption alone, it would not be possible to open up a new secure connection on the Internet, because there would be no safe way initially to transmit the shared key to the other end of the connection without intruders being able to intercept it. A government or major corporation might send someone with a briefcase handcuffed to his wrist, but for many applications this is impractical.

Another problem arises when keys are compromised. Suppose an intruder has broken into Alice's system and it is possible he now has all the keys she knows, or suppose Alice leaves the company to work for a competitor. In either case, all Alice's keys must be replaced; this takes many changes on her system and one change each on every system she communicates with, and all the communication must be done without using any of the compromised keys.

Various techniques can be used to address these difficulties. A centralised key-dispensing server, such as the [[Kerberos]] system, is one method. Each user then needs to manage only one key, the one for access to that server; all other keys are provided at need by the server.

The development of [[#public key | public key]] techniques, described in the next section, allows simpler solutions.

=== Public key systems ===

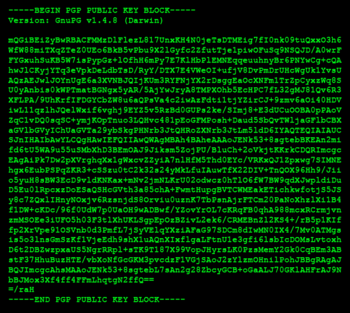

{{Image|GPG public key.png|right|350px|A [[public key]] for [[encryption]] via [[GnuPG]].}}

'''Public key''' or [[asymmetric key cryptography]] was first proposed, in the open literature, in 1976 by [[Whitfield Diffie]] and [[Martin Hellman]].<ref>{{citation
| first1 = Whitfield | last1 = Diffie | first2 = Martin | last2 = Hellman
| title = Multi-user cryptographic techniques
| journal = AFIPS Proceedings
| volume = 45
| pages = 109-112
| date = June 8, 1976}}</ref> The historian [[David Kahn]] described it as "the most revolutionary new concept in the field since polyalphabetic substitution emerged in the Renaissance".<ref>David Kahn, "Cryptology Goes Public", 58 ''Foreign Affairs'' 141, 151 (fall 1979), p. 153</ref> There are two reasons why public key cryptography is so important. One is that it solves the key management problem described in the preceding section; the other is that public key techniques are the basis for [[#digital signature|digital signatures]].

In a public key system, keys are created in matched pairs, such that when one of a pair is used to encrypt, ''the other must be used to decrypt''. The system is designed so that calculation of one key from knowledge of the other is computationally infeasible, even though they are necessarily related. Keys are generated secretly, in interrelated pairs. One key from a pair becomes the '''public key''' and can be published. The other is the '''private key''' and is kept secret, never leaving the user's computer.
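The matched-pair idea can be made concrete with a toy version of RSA, one of the systems named below, using the small textbook primes 61 and 53. This is unpadded "textbook" RSA with numbers small enough to factor by hand, so it has no security at all; real RSA keys are thousands of bits long and are always used with a padding scheme. `pow(e, -1, phi)` needs Python 3.8 or later.

```python
def toy_rsa_keypair(p: int, q: int, e: int = 17):
    """Textbook RSA key generation from two primes (illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)    # private exponent: inverse of e modulo phi
    return (n, e), (n, d)  # (public key, private key)


def apply_key(key, m: int) -> int:
    """Raise m to the key's exponent modulo n; the same operation serves
    for both keys of the pair."""
    n, exponent = key
    return pow(m, exponent, n)


public, private = toy_rsa_keypair(61, 53)
c = apply_key(public, 65)   # encrypt with the public key
m = apply_key(private, c)   # only the matching private key undoes it
print(public, private, c, m)
```

Here the public key is (3233, 17) and the private key is (3233, 2753). Deriving 2753 from 17 required knowing the factors 61 and 53 of 3233; with realistically large primes, recovering those factors from the public modulus is believed to be computationally infeasible, which is what lets the public key be published.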

In many applications, public keys are widely published — on the net, in the phonebook, on business cards, on key server computers which provide an index of public keys. However, it is also possible to use public key technology while restricting access to public keys; some military systems do this, for example. The point of public keys is not that they ''must'' be made public, but that they ''could'' be; the security of the system does not depend on keeping them secret. | |||

One big payoff is that two users (traditionally, A and B or [[Alice and Bob]]) need not share a secret key in order to communicate securely. When used for [[communications security#content confidentiality|content confidentiality]], the ''public key'' is typically used for encryption, while the ''private key'' is used for decryption. If Alice has (a trustworthy, verified copy of) Bob's public key, then she can encrypt with that and know that only Bob can read the message since only he has the matching private key. He can reply securely using her public key. '''This solves the key management problem'''. The difficult question of how to communicate secret keys securely does not need to even be asked; the private keys are never communicated and there is no requirement that communication of public keys be done securely. | |||

Moreover, key management on a single system becomes much easier. In a system based on secret keys, if Alice communicates with ''N'' people, her system must manage ''N'' secret keys all of which change periodically, all of which must sometimes be communicated, and each of which must be kept secret from everyone except the one person it is used with. For a public key system, the main concern is managing her own private key; that generally need not change and it is never communicated to anyone. Of course, she must also manage the public keys for her correspondents. In some ways, this is easier; they are already public and need not be kept secret. However, it is absolutely necessary to '''authenticate each public key'''. Consider a philandering husband sending passionate messages to his mistress. If the wife creates a public key in the mistress' name and he does not check the key's origins before using it to encrypt messages, he may get himself in deep trouble. | |||

Public-key encryption is slower than conventional symmetric encryption so it is common to use a public key algorithm for key management but a faster symmetric algorithm for the main data encryption. Such systems are described in more detail below; see [[#hybrid cryptosystems|hybrid cryptosystems]]. | |||

The other big payoff is that, given a public key cryptosystem, [[#digital signature|digital signatures]] are a straightforward application. The basic principle is that if Alice uses her private key to encrypt some known data, then anyone can decrypt with her public key and, if they get the right data, they know (assuming the system is secure and her private key is unknown to others) that she did the encryption. In effect, she can use her private key to sign a document. The details are somewhat more complex and are dealt with in a [[#digital signature|later section]].

Many different asymmetric techniques have been proposed, and some have been shown to be vulnerable to some forms of [[cryptanalysis]]; see the [[public key]] article for details. The most widely used public key techniques today are the [[Diffie-Hellman]] key agreement protocol and the [[RSA public-key system]]<ref name=RSA>{{citation

| first1 = Ronald L. | last1 = Rivest | first2 = Adi |last2= Shamir | first3 = Len | last3 = Adleman | |||

| url = http://theory.lcs.mit.edu/~rivest/rsapaper.pdf | |||

| title = A Method for Obtaining Digital Signatures and Public-Key Cryptosystems}}</ref>. Techniques based on [[elliptic curve]]s are also used. The security of each of these techniques depends on the difficulty of some mathematical problem: [[integer factorisation]] for RSA, [[discrete logarithm]] for Diffie-Hellman, and so on. These problems are generally thought to be hard; no general solution methods efficient enough to provide reasonable attacks are known. However, there is no proof that such methods do not exist. If an efficient solution for one of these problems were found, it would break the corresponding cryptosystem. Also, in some cases there are efficient methods for special classes of the problem, so the cryptosystem must avoid these cases. For example, [[Attacks_on_RSA#Weiner_attack|Wiener's attack]] on RSA works if the private exponent is small enough, so any RSA-based system must ensure that its private exponent is not small.
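To make the Diffie-Hellman case concrete, here is a toy sketch of the key agreement over a 32-bit prime field. The prime 2<sup>32</sup> − 5 and the base 5 are illustrative choices, not values from any standard; real deployments use standardised groups of 2048 bits or more, or elliptic curves. An eavesdropper sees p, g, A and B, and recovering either secret exponent from them is exactly the discrete logarithm problem.

```python
import secrets

# Toy parameters: far too small for real use.
p = 2**32 - 5   # 4294967291, the largest prime below 2**32
g = 5           # public base (illustrative choice)

# Each party picks a random secret exponent in [1, p - 2].
a = secrets.randbelow(p - 2) + 1   # Alice's secret
b = secrets.randbelow(p - 2) + 1   # Bob's secret

# The public values are exchanged in the clear.
A = pow(g, a, p)   # Alice -> Bob
B = pow(g, b, p)   # Bob -> Alice

# Each side combines its own secret with the other's public value.
alice_shared = pow(B, a, p)   # (g^b)^a mod p
bob_shared = pow(A, b, p)     # (g^a)^b mod p
print(alice_shared == bob_shared)   # True: both obtain g^(ab) mod p
```

In practice the shared value is passed through a hash or key derivation function before being used as a symmetric key.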

In 1997, it finally became publicly known that asymmetric cryptography had been invented by James H. Ellis at GCHQ, a [[United Kingdom|British]] intelligence organization, in the early 1970s, and that both the RSA and Diffie-Hellman algorithms had been developed there earlier, by Clifford Cocks and Malcolm Williamson respectively

<ref>{{citation | |||

| url =http://www.cesg.gov.uk/publications/historical.shtml | |||

| author = Clifford Cocks | |||

| title = A Note on 'Non-Secret Encryption' | |||

| journal = CESG Research Report | |||

| date = November 1973 | |||

}}</ref>

<ref>{{citation | |||

| url =http://www.cesg.gov.uk/publications/historical.shtml | |||

| author = Malcolm Williamson | |||

| title = Non-Secret Encryption Using a Finite Field | |||

| journal = CESG Research Report | |||

| date = 1974 | |||

}}</ref>. | |||

=== Cryptographic hash algorithms === | |||

A [[cryptographic hash]] or '''message digest''' algorithm takes an input of arbitrary size and produces a fixed-size digest, a sort of fingerprint of the input document. Some of the techniques are the same as those used in other areas of cryptography, but the goal is quite different. Where ciphers (whether symmetric or asymmetric) provide secrecy, hashes provide authentication.

Using a hash for [[information security#integrity|data integrity protection]] is straightforward. If Alice hashes the text of a message and appends the hash to the message when she sends it to Bob, then Bob can verify that he got the correct message. He computes a hash from the received message text and compares that to the hash Alice sent. If they compare equal, then he knows (with overwhelming probability, though not with absolute certainty) that the message was received exactly as Alice sent it. Exactly the same method works to ensure that a document extracted from an archive, or a file downloaded from a software distribution site, is as it should be. | |||
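The unkeyed integrity check just described can be sketched with Python's standard <code>hashlib</code> module. SHA-256 is an illustrative choice of hash, and the helper name <code>digest</code> is hypothetical. As the next paragraph explains, this only catches accidental changes.

```python
import hashlib


def digest(data: bytes) -> str:
    """Hex-encoded SHA-256 digest: always 256 bits, whatever the input size."""
    return hashlib.sha256(data).hexdigest()


message = b"Meet me at noon."
sent_hash = digest(message)              # Alice appends this to the message

received = b"Meet me at noon."
print(digest(received) == sent_hash)     # True: the text arrived unchanged

corrupted = b"Meet me at nine."
print(digest(corrupted) == sent_hash)    # False: any change alters the digest

# The digest has a fixed size regardless of input length.
print(len(digest(b"")), len(digest(b"x" * 1_000_000)))   # 64 64 (hex characters)
```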

However, the simple technique above is useless against an adversary who intentionally changes the data. The enemy simply calculates a new hash for his changed version and stores or transmits that instead of the original hash. '''To resist an adversary takes a keyed hash''', a [[hashed message authentication code]] or HMAC. Sender and receiver share a secret key; the sender hashes using both the key and the document data, and the receiver verifies using both. Lacking the key, the enemy cannot alter the document undetected. | |||

If Alice uses an HMAC and it verifies correctly, then Bob knows ''both'' that the received data is correct ''and'' that whoever sent it knew the secret key. If the hash is secure and only Alice and Bob know that key, then he knows Alice was the sender. An HMAC provides [[information security#source authentication|source authentication]] as well as data authentication.
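A short sketch using Python's standard <code>hmac</code> module with SHA-256. The shared key is generated locally here and is assumed to have been agreed between Alice and Bob in advance; <code>make_tag</code> and <code>check_tag</code> are hypothetical helper names. The last lines show why an unkeyed hash is not enough: an attacker can recompute a plain hash for a forged message, but cannot produce a valid HMAC without the key.

```python
import hashlib
import hmac
import secrets

key = secrets.token_bytes(32)   # shared secret, assumed agreed in advance


def make_tag(key: bytes, message: bytes) -> bytes:
    """HMAC-SHA256 over the message, using the shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def check_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    # compare_digest is designed to reduce timing side channels in the comparison.
    return hmac.compare_digest(make_tag(key, message), tag)


message = b"Pay Bob 100 euros."
tag = make_tag(key, message)            # Alice sends message and tag
print(check_tag(key, message, tag))     # True

# An attacker without the key can hash a forged message, but that is not a valid HMAC.
forged = b"Pay Mallory 900 euros."
print(check_tag(key, forged, hashlib.sha256(forged).digest()))   # False
```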

''See also [[One-way encryption]].'' | |||


=== Random numbers === | |||

Many cryptographic operations require random numbers, and the design of strong [[random number generator]]s is considered part of cryptography. It is not enough that the outputs have good statistical properties; the generator must also withstand efforts by an adversary to compromise it. Many cryptographic systems, including some otherwise quite good ones, have been broken because a poor quality random number generator was the weak link that gave a [[Cryptanalysis|cryptanalyst]] an opening. | |||

For example, generating [[RSA algorithm|RSA]] keys requires large random primes, [[Diffie-Hellman]] key agreement requires that each party provide a random component, and in a [[challenge-response protocol]] the challenges should be random. Many protocols use '''session keys''' for parts of the communication; for example, [[PGP]] uses a different key for each message and [[IPsec]] changes keys periodically. These keys should be random. In any of these applications, and many more, using poor-quality random numbers greatly weakens the system.
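In Python, for example, the standard <code>secrets</code> module draws on the operating system's cryptographically secure generator and is the documented choice for keys and tokens. The variable names and sizes below are illustrative assumptions.

```python
import secrets

# Cryptographic-quality randomness from the operating system's generator.
session_key = secrets.token_bytes(16)              # 128-bit symmetric session key
challenge = secrets.token_hex(16)                  # 16 random bytes as 32 hex chars, e.g. a challenge
dh_secret = secrets.randbelow(2**255) + 1          # random secret in [1, 2**255], e.g. a DH component

# By contrast, Python's `random` module (a Mersenne Twister) is meant for simulations:
# its internal state can be reconstructed from enough outputs, so it must not be used for keys.
```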

The requirements for random numbers that resist an adversary — someone who wants to cheat at a casino or read an encrypted message — are much stricter than those for non-adversarial applications such as a simulation. The standard reference is the "Randomness Requirements for Security" RFC.<ref name=RFC4086>{{citation | |||

| id = RFC 4086; IETF Best Current Practice 106 | |||

| title = Randomness Requirements for Security | |||

| first1 = D. 3rd | last1 = Eastlake | first2 = J. |last2 = Schiller | first3 = S. | last3 = Crocker | |||

| date = June 2005 | |||

| url = http://www.ietf.org/rfc/rfc4086.txt}}</ref> | |||

=== One-way encryption === | |||

''See [[One-way encryption]].'' | |||

=== Steganography === | |||

[[Steganography]] is the study of techniques for hiding a secret message within an apparently innocent message. If this is done well, it can be extremely difficult to detect. | |||

Generally, the best place for steganographic hiding is in a large chunk of data with an inherent random noise component: photos, audio, or especially video. For example, given an image with one megapixel and three bytes for different colours in each pixel, one could hide three megabits of message in the least significant bit of each byte, with reasonable hope that the change to the image would be unnoticeable. Encrypting the data before hiding it steganographically is a common practice; because encrypted data appears quite random, this can make steganography very difficult to detect. In our example, three megabits of encrypted data would look very much like the random noise which one might expect in the least significant bits of a photographic image that had not been tampered with.
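A minimal sketch of least-significant-bit hiding. The cover here is random bytes standing in for the raw, uncompressed RGB data of a small image; decoding and re-encoding a real image file is omitted, and <code>hide</code> and <code>reveal</code> are hypothetical names. Each cover byte changes by at most one, and the final line reproduces the three-megabit capacity estimate above.

```python
import os


def hide(cover: bytes, secret: bytes) -> bytearray:
    """Overwrite the least significant bit of successive cover bytes with the secret's bits."""
    bits = [(byte >> shift) & 1 for byte in secret for shift in range(7, -1, -1)]
    if len(bits) > len(cover):
        raise ValueError("cover too small for secret")
    stego = bytearray(cover)
    for i, bit in enumerate(bits):
        stego[i] = (stego[i] & 0xFE) | bit   # clear the low bit, then set it to the message bit
    return stego


def reveal(stego: bytes, length: int) -> bytes:
    """Read `length` bytes back out of the low bits, most significant bit first."""
    out = bytearray()
    for k in range(length):
        value = 0
        for i in range(8 * k, 8 * k + 8):
            value = (value << 1) | (stego[i] & 1)
        out.append(value)
    return bytes(out)


cover = os.urandom(3 * 1000)            # stand-in for the RGB bytes of a 1000-pixel image
secret = b"attack at dawn"
stego = hide(cover, secret)
print(reveal(stego, len(secret)))                       # b'attack at dawn'
print(max(abs(a - b) for a, b in zip(cover, stego)))    # at most 1

capacity_bits = 1_000_000 * 3 * 1       # one megapixel x 3 bytes per pixel x 1 bit per byte
```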

One application of steganography is to place a [[digital watermark]] in a media work; this might allow the originator to prove that someone had copied the work, or in some cases to trace which customer had allowed copying. | |||

However, a message can be concealed in almost anything. In text, non-printing characters can be used (for example, a space added before a line break does not appear on a reader's screen, and an extra space after a period might not be noticed), or the text itself may include hidden messages. For example, in [[Neal Stephenson]]'s novel ''[[Cryptonomicon]]'', one message is [[#Codes_versus_ciphers|coded]] as an email joke; a joke about Imelda Marcos carries one meaning, while one about Ferdinand Marcos would carry another.

Often indirect methods are used. If Alice wants to send a disguised message to Bob, she need not send him a covering message directly. That would tell an enemy doing traffic analysis at least that Alice and Bob were communicating. Instead, she can place a classified ad containing a code phrase in the local newspaper, or put a photo or video with a steganographically hidden message on a web site. This makes detection quite difficult, though it is still possible for an enemy that monitors everything, or for one that already suspects something and is closely monitoring both Alice and Bob.

A related technique is the [[covert channel]], where the hidden message is not embedded within the visible message, but rather carried by some other aspect of the communication. For example, one might vary the time between characters of a legitimate message in such a way that the time intervals encoded a covert message. | |||


== Combination mechanisms == | |||

The basic techniques described above can be combined in many ways. Some common combinations are described here. | |||

=== Digital signatures ===

Two cryptographic techniques are used together to produce a [[digital signature]], a [[#Cryptographic hash algorithms | hash]] and a [[#Public key systems | public key]] system. | |||


Alice calculates a hash from the message, encrypts that hash with her private key, combines the encrypted hash with some identifying information to say who is signing the message, and appends the combination to the message as a signature. | |||

To verify the signature, Bob uses the identifying information to look up Alice's public key and checks signatures or certificates to verify the key. He uses that public key to decrypt the hash in the signature; this gives him the hash Alice calculated. He then hashes the received message body himself to get another hash value and compares the two hashes. If the two hash values are identical, then Bob knows with overwhelming probability that the document Alice signed and the document he received are identical. He also knows that whoever generated the signature had Alice's private key. If both the hash and the public key system used are secure, and no-one except Alice knows her private key, then the signatures are trustworthy. | |||
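The hash-then-sign steps above can be sketched with textbook RSA and SHA-256. For a self-contained example the key is built from two well-known Mersenne primes (2<sup>127</sup> − 1 and 2<sup>521</sup> − 1), so it is '''not''' secret; the identifying information and the padding schemes (such as PKCS #1 v1.5 or PSS) that real signature systems require are omitted. Function names are hypothetical.

```python
import hashlib

# Toy RSA key pair for Alice from two publicly known Mersenne primes: NOT secret, illustration only.
p, q = 2**127 - 1, 2**521 - 1
n = p * q
e = 65537                            # Alice's public exponent
d = pow(e, -1, (p - 1) * (q - 1))    # Alice's private exponent (Python 3.8+)


def message_hash(message: bytes) -> int:
    """SHA-256 digest as a 256-bit integer; it is smaller than n, so no reduction is needed."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big")


def sign(message: bytes) -> int:
    """Alice 'encrypts' the hash with her private key."""
    return pow(message_hash(message), d, n)


def verify(message: bytes, signature: int) -> bool:
    """Bob 'decrypts' with Alice's public key and compares it with his own hash."""
    return pow(signature, e, n) == message_hash(message)


contract = b"Alice agrees to pay Bob 100 euros."
signature = sign(contract)
print(verify(contract, signature))                                # True
print(verify(b"Alice agrees to pay Bob 900 euros.", signature))   # False
```

The second check fails because the altered document has a different hash, which is the property described in the next paragraph.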

A digital signature has some of the desirable properties of an ordinary [[signature]]. It is easy for a user to produce, but difficult for anyone else to [[forgery|forge]]. The signature is permanently tied to the content of the message being signed, and to the identity of the signer. It cannot be copied from one document to another, or used with an altered document, since the different document would give a different hash. A miscreant cannot sign in someone else's name because he does not know the required private key.

Any public key technique can provide digital signatures. The [[RSA algorithm]] is widely used, as is the US government standard [[Digital Signature Algorithm]] (DSA). | |||

Once you have digital signatures, a whole range of other applications can be built using them. Many software distributions are signed by the developers; users can check the signatures before installing. Some operating systems will not load a driver unless it has the right signature. On [[Usenet]], things like new group commands and [[NoCeM]]s [http://www.xs4all.nl/~rosalind/nocemreg/nocemreg.html] carry a signature. The digital equivalent of having a document notarised is to get a trusted party to sign a combination document — the original document plus identifying information for the notary, a time stamp, and perhaps other data. | |||

See also the next two sections, "Digital certificates" and "Public key infrastructure". | |||

The use of digital signatures raises legal issues. There is an online [http://dsls.rechten.uvt.nl/ survey] of relevant laws in various countries. | |||

=== Digital certificates ===

[[Digital certificate]]s are the digital analog of an identification document such as a driver's license, passport, or business license. Like those documents, they usually have expiration dates, and a means of verifying both the validity of the certificate and of the certificate issuer. Like those documents, they can sometimes be revoked. | |||

The technology for generating these is in principle straightforward; simply assemble the appropriate data, munge it into the appropriate format, and have the appropriate authority digitally sign it. In practice, it is often rather complex. | |||

=== Public key infrastructure === | |||

Practical use of asymmetric cryptography, on any sizable basis, requires a [[public key infrastructure]] (PKI). It is not enough to just have public key technology; there need to be procedures for signing things, verifying keys, revoking keys and so on. | |||

In typical PKIs, public keys are embedded in [[digital certificate]]s issued by a [[certification authority]]. If a private key is compromised, the certification authority can revoke the corresponding certificate by adding it to a [[certificate revocation list]]. There is often a hierarchy of certificates; for example, a school's certificate might be issued by a local school board, which is certified by the state education department, that by the national education office, and that by the national government master key.
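A simplified sketch of checking such a hierarchy. Each "certificate" here is a hypothetical dictionary binding a name to a public key, signed with textbook RSA by its issuer; the 512-bit keys are generated on the fly and are far too small for real use. Expiry dates, revocation checks, padding, and real certificate formats such as X.509 are all omitted, and every function and field name is an assumption for illustration.

```python
import hashlib
import json
import secrets


def is_probable_prime(n: int, rounds: int = 40) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    for small in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29):
        if n % small == 0:
            return n == small
    d, r = n - 1, 0
    while d % 2 == 0:
        d //= 2
        r += 1
    for _ in range(rounds):
        x = pow(secrets.randbelow(n - 3) + 2, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(r - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False
    return True


def random_prime(bits: int) -> int:
    while True:
        candidate = secrets.randbits(bits) | (1 << (bits - 1)) | 1   # force size and oddness
        if is_probable_prime(candidate):
            return candidate


def toy_keypair(bits: int = 256):
    """Textbook RSA (public, private) pair from two random primes; toy size only."""
    e = 65537
    while True:
        p, q = random_prime(bits), random_prime(bits)
        phi = (p - 1) * (q - 1)
        if p != q and phi % e != 0:   # e is prime, so this makes e invertible mod phi
            return (e, p * q), (pow(e, -1, phi), p * q)


def digest(body: dict) -> int:
    """SHA-256 of a canonical JSON encoding, as an integer (smaller than any modulus here)."""
    encoded = json.dumps(body, sort_keys=True).encode()
    return int.from_bytes(hashlib.sha256(encoded).digest(), "big")


def issue(subject, subject_public, issuer, issuer_private) -> dict:
    """The issuer signs a binding between a name and a public key."""
    body = {"subject": subject, "public_key": list(subject_public), "issuer": issuer}
    d, n = issuer_private
    return {"body": body, "signature": pow(digest(body), d, n)}


def verify_chain(chain, trusted_root_public) -> bool:
    """chain[0] is the end entity; each certificate is checked with the next one's key,
    and the last one with the root key, which is assumed to be trusted out of band."""
    for i, cert in enumerate(chain):
        if i + 1 < len(chain):
            issuer_cert = chain[i + 1]
            if cert["body"]["issuer"] != issuer_cert["body"]["subject"]:
                return False
            e, n = issuer_cert["body"]["public_key"]
        else:
            e, n = trusted_root_public
        if pow(cert["signature"], e, n) != digest(cert["body"]):
            return False
    return True


names = ["National government root", "National education office",
         "State education department", "Local school board", "Example School"]
keys = {name: toy_keypair() for name in names}   # name -> (public, private)
certs = [issue(child, keys[child][0], parent, keys[parent][1])
         for parent, child in zip(names, names[1:])]
chain = certs[::-1]                   # the school's certificate first, the root-signed one last
root_public = keys[names[0]][0]
print(verify_chain(chain, root_public))    # True

# Substituting another public key into the school's certificate breaks its signature.
forged_body = dict(chain[0]["body"], public_key=list(keys["Local school board"][0]))
forged = [{"body": forged_body, "signature": chain[0]["signature"]}] + chain[1:]
print(verify_chain(forged, root_public))   # False
```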

An alternative non-hierarchical [[web of trust]] model is used in [[PGP]]. Any key can sign any other; digital certificates are not required. Alice might accept the school's key as valid because her friend Bob is a parent there and has signed the school's key. Or because the principal gave her a business card with his key on it and he has signed the school key. Or both. Or some other combination; Carol has signed Dave's key and he signed the school's. It becomes fairly tricky to decide whether that last one justifies accepting the school key, however. | |||

=== Hybrid cryptosystems ===

Most real applications combine several of the above techniques into a [[hybrid cryptosystem]]. Public-key encryption is slower than conventional symmetric encryption, so use a symmetric algorithm for the bulk data encryption. On the other hand, public key techniques handle the key management problem well, and that is difficult with symmetric encryption alone, so use public key methods to manage keys. Neither symmetric nor public key methods are ideal for data authentication; use a hash for that. Many of the protocols also need cryptographic quality [[random number]]s. | |||
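A rough sketch of how the pieces fit, loosely following the PGP-style pattern: a fresh random session key, symmetric bulk encryption, an HMAC for authentication, and a public-key step to deliver the session key. Because Python's standard library has no block or stream cipher, a SHA-256 counter-mode keystream stands in for the symmetric cipher; it is a plainly labelled stand-in, not a vetted algorithm such as AES. The RSA key is textbook RSA built from two well-known Mersenne primes and is therefore not secret. All names are hypothetical.

```python
import hashlib
import hmac
import secrets

# Bob's toy RSA key pair from two publicly known Mersenne primes: NOT secret, illustration only.
P, Q, E = 2**127 - 1, 2**521 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))


def keystream(key: bytes, length: int) -> bytes:
    """Stand-in symmetric cipher: SHA-256(key || counter) blocks. Use AES or similar in practice."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def derive(session_key: bytes, label: bytes) -> bytes:
    """Separate encryption and MAC keys derived from one session key."""
    return hashlib.sha256(label + session_key).digest()


def xor(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))


def seal(plaintext: bytes):
    session_key = secrets.token_bytes(32)                      # fresh random key per message
    enc_key, mac_key = derive(session_key, b"enc"), derive(session_key, b"mac")
    ciphertext = xor(plaintext, keystream(enc_key, len(plaintext)))   # symmetric bulk step
    tag = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()      # hash-based authentication
    wrapped_key = pow(int.from_bytes(session_key, "big"), E, N)       # public-key step
    return wrapped_key, ciphertext, tag


def open_sealed(wrapped_key: int, ciphertext: bytes, tag: bytes) -> bytes:
    session_key = pow(wrapped_key, D, N).to_bytes(32, "big")          # private-key step
    enc_key, mac_key = derive(session_key, b"enc"), derive(session_key, b"mac")
    expected = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("authentication failed")
    return xor(ciphertext, keystream(enc_key, len(ciphertext)))


wrapped, ct, tag = seal(b"Meet me at noon.")
print(open_sealed(wrapped, ct, tag))   # b'Meet me at noon.'
```

Only the 32-byte session key passes through the slow public-key operation; the message itself, however long, goes through the fast symmetric step.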

Examples abound, each using a somewhat different combination of methods to meet its particular application requirements. | |||

In [[Pretty Good Privacy]] ([[PGP]]) email encryption the sender generates a random key for the symmetric bulk encryption and uses public key techniques to securely deliver that key to the receiver. Hashes are used in generating digital signatures. | |||

In [[IPsec]] (Internet Protocol Security) public key techniques provide [[information security#source authentication|source authentication]] for the gateway computers which manage the tunnel. Keys are set up using the [[Diffie-Hellman]] key agreement protocol and the actual data packets are (generally) encrypted with a [[block cipher]] and authenticated with an [[HMAC]]. | |||

In [[Secure Sockets Layer]] (SSL) or its successor [[Transport Layer Security]] (TLS), which provides secure web browsing (http'''s'''), digital certificates are used for [[information security#source authentication|source authentication]], and connections are encrypted with a symmetric cipher, either a [[block cipher]] or a [[stream cipher]].

== Cryptographic hardware == | |||

Historically, many ciphers were done with pencil and paper but various mechanical and electronic devices were also used. See [[history of cryptography]] for details. Various machines were also used for [[cryptanalysis]], the most famous example being the British [[ULTRA]] project during the [[Second World War]] which made extensive use of mechanical and electronic devices in cracking German ciphers. | |||

Modern ciphers are generally algorithms which can run on any general purpose computer, though there are exceptions such as [[Stream cipher#Solitaire | Solitaire]] designed for manual use. However, ciphers are also often implemented in hardware; as a general rule, anything that can be implemented in software can also be done with an [[FPGA]] or a custom chip. This can produce considerable speedups or cost savings. | |||

The [[RSA algorithm]] requires arithmetic operations on quantities of 1024 or more bits. This can be done on a general-purpose computer, but special hardware can make it quite a bit faster. Such hardware is therefore a fairly common component of boards designed to accelerate [[SSL]]. | |||

Hardware encryption devices are used in a variety of applications. [[Block cipher]]s are often done in hardware; the [[Data Encryption Standard]] was originally intended to be implemented only in hardware. For the [[Advanced Encryption Standard]], there are a number of [[Advanced Encryption Standard#AES in hardware|AES chips]] on the market, and Intel have added AES instructions to their CPUs. Hardware implementations of [[stream cipher]]s are widely used to encrypt communication channels, for example in cell phones or military radio. The [[NSA]] have developed an encryption box for local area networks called [[TACLANE]].

When encryption is implemented in hardware, it is necessary to defend against [[Cryptanalysis#Side_channel_attacks | side channel attacks]], for example to prevent an enemy analysing or even manipulating the radio frequency output or the power usage of the device. | |||

Hardware can also be used to facilitate attacks on ciphers. [[Brute force attack]]s on [[cipher]]s work very well on parallel hardware; in effect you can make them as fast as you like if you have the budget. Many machines have been proposed, and several actually built, for attacking the [[Data Encryption Standard]] in this way; for details see the [[Data_Encryption_Standard#DES_history_and_controversy | DES article]].
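The budget argument is simple arithmetic: on average a brute-force search finds the key after trying half the key space, and the work divides evenly across chips. The sketch below uses the 56-bit DES key size; the rate of 10<sup>9</sup> keys per second per chip is a hypothetical figure chosen for illustration, not a measurement of any real machine.

```python
def expected_search_days(key_bits: int, keys_per_second_per_chip: float, chips: int) -> float:
    """Expected brute-force time in days: half the key space, split evenly across chips."""
    expected_trials = 2 ** (key_bits - 1)
    seconds = expected_trials / (keys_per_second_per_chip * chips)
    return seconds / 86_400


# Hypothetical hardware: 1e9 keys per second per chip (an assumption, not a measured figure).
for chips in (1, 1_000, 1_000_000):
    print(chips, expected_search_days(56, 1e9, chips))
```

Each thousand-fold increase in the number of chips cuts the expected time a thousand-fold, which is why key length, rather than the speed of any one machine, is what protects a cipher against this attack.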

A device called TWIRL <ref>{{cite paper

| author=Adi Shamir & Eran Tromer | |||

| title=On the cost of factoring RSA-1024 | |||

| journal=RSA CryptoBytes | |||

| volume=6 | |||

| date=2003 | |||

| url=http://people.csail.mit.edu/tromer/ | |||

}}</ref> has been proposed for rapid [[integer factorisation|factoring]] of 1024-bit numbers; for details see [[attacks on RSA]]. | |||

== Legal and political issues == | |||

A number of legal and political issues arise in connection with cryptography. In particular, government regulations controlling the use or export of cryptography are passionately debated. For detailed discussion, see [[politics of cryptography]]. | |||

There were extensive debates over cryptography, sometimes called the "crypto wars", mainly in the 1990s. The main advocates of strong controls over cryptography were various governments, especially the US government. On the other side were many computer and Internet companies, a loose coalition of radicals called cypherpunks, and advocacy groups such as the [[Electronic Frontier Foundation]]. One major issue was [[export controls]]; another was attempts such as the [[Clipper chip]] to enforce [[escrowed encryption]] or "government access to keys". A history of this fight is Steven Levy's ''Crypto: How the Code Rebels Beat the Government — Saving Privacy in the Digital Age''

<ref name="levybook">{{cite book

| author = Steven Levy

| title = Crypto: How the Code Rebels Beat the Government — Saving Privacy in the Digital Age

| publisher = Penguin

| date = 2001

| id = ISBN 0-14-024432-8

| pages = 56

}}</ref>. "Code Rebels" in the title is almost synonymous with cypherpunks.

Encryption used for [[Digital Rights Management]] also sets off passionate debates and raises legal issues. Should it be illegal for a user to defeat the encryption on a DVD? Or for a movie cartel to manipulate the market using encryption in an attempt to force Australians to pay higher prices because US DVDs will not play on their machines? | |||

The legal status of digital signatures can be an issue, and cryptographic techniques may affect the acceptability of computer data as evidence. Access to data can be an issue: can a warrant or a tax auditor force someone to decrypt data, or even to turn over the key? The British [[Regulation of Investigatory Powers Act]] includes such provisions. Is encrypting a laptop hard drive when traveling just a reasonable precaution, or is it reason for a border control officer to become suspicious? | |||

Two online surveys cover cryptography laws around the world, one for [http://rechten.uvt.nl/koops/cryptolaw/ usage and export restrictions] and one for [http://dsls.rechten.uvt.nl/ digital signatures]. | |||

==References==

{{reflist|2}}[[Category:Suggestion Bot Tag]] | |||

Latest revision as of 11:00, 3 August 2024

This article may be deleted soon.

The term cryptography comes from Greek κρυπτός kryptós "hidden," and γράφειν gráfein "to write". In the simplest case, the sender hides (encrypts) a message (plaintext) by converting it to an unreadable jumble of apparently random symbols (ciphertext). The process involves a key, a secret value that controls some of the operations. The intended receiver knows the key, so he can recover the original text (decrypt the message). Someone who intercepts the message sees only apparently random symbols; if the system performs as designed, then without the key an eavesdropper cannot read messages. Various techniques for obscuring messages have been in use by the military, by spies, and by diplomats for several millennia and in commerce at least since the Renaissance; see History of cryptography for details. With the spread of computers and electronic communication systems in recent decades, cryptography has become much more broadly important. Banks use cryptography to identify their customers for Automatic Teller Machine (ATM) transactions and to secure messages between the ATM and the bank's computers. Satellite TV companies use it to control who can access various channels. Companies use it to protect proprietary data. Internet protocols use it to provide various security services; see below for details. Cryptography can make email unreadable except by the intended recipients, or protect data on a laptop computer so that a thief cannot get confidential files. Even in the military, where cryptography has been important since the time of Julius Caesar, the range of uses is growing as new computing and communication systems come into play. With those changes comes a shift in emphasis. Cryptography is, of course, still used to provide secrecy. However, in many cryptographic applications, the issue is authentication rather than secrecy. 
The Personal identification number (PIN) for an ATM card is a secret, but it is not used as a key to hide the transaction; its purpose is to prove that it is the customer at the machine, not someone with a forged or stolen card. The techniques used for this are somewhat different than those for secrecy, and the techniques for authenticating a person are different from those for authenticating data — for example checking that a message has been received accurately or that a contract has not been altered. However, all these fall in the domain of cryptography. See information security for the different types of authentication, and hashes and public key systems below for techniques used to provide them. Over the past few decades, cryptography has emerged as an academic discipline. The seminal paper was Claude Shannon's 1949 "Communication Theory of Secrecy Systems"[1]. Today there are journals, conferences, courses, textbooks, a professional association and a great deal of online material; see our bibliography and external links page for details. In roughly the same period, cryptography has become important in a number of political and legal controversies. Cryptography can be an important tool for personal privacy and freedom of speech, but it can also be used by criminals or terrorists. Should there be legal restrictions? Cryptography can attempt to protect things like e-books or movies from unauthorised access; what should the law say about those uses? Such questions are taken up below and in more detail in a politics of cryptography article. Up to the early 20th century, cryptography was chiefly concerned with linguistic patterns. Since then the emphasis has shifted, and cryptography now makes extensive use of mathematics, primarily information theory, computational complexity, abstract algebra, and number theory. However, cryptography is not just a branch of mathematics. It might also be considered a branch of information security or of engineering. 
As well as being aware of cryptographic history and techniques, and of cryptanalytic methods, cryptographers must also carefully consider probable future developments. For instance, the effects of Moore's Law on the speed of brute force attacks must be taken into account when specifying key lengths, and the potential effects of quantum computing are already being considered. Quantum cryptography is an active research area.

Cryptography is difficult

Cryptography, and more generally information security, is difficult to do well. For one thing, it is inherently hard to design a system that resists efforts by an adversary to compromise it, considering that the opponent may be intelligent and motivated, will look for attacks that the designer did not anticipate, and may have large resources. To be secure, the system must resist all attacks; to break it, the attacker need only find one effective attack. Moreover, new attacks may be discovered and old ones may be improved or may benefit from new technology, such as faster computers or larger storage devices, but there is no way for attacks to become weaker or a system stronger over time. Schneier calls this "the cryptographer's adage: attacks always get better, they never get worse."[2]

Also, neither the user nor the system designer gets feedback on problems. If your word processor fails or your bank's web site goes down, you see the results and are quite likely to complain to the supplier. If your cryptosystem fails, you may not know. If your bank's cryptosystem fails, they may not know, and may not tell you if they do. If a serious attacker (a criminal breaking into a bank, a government running a monitoring program, an enemy in war, or any other) breaks a cryptosystem, he will certainly not tell the users of that system. If the users become aware of the break, then they will change their system, so it is very much in the attacker's interest to keep the break secret.
In a famous example, the British ULTRA project read many German ciphers through most of World War II, and the Germans never realised it. Cryptographers routinely publish details of their designs and invite attacks. In accordance with Kerckhoffs' Principle, a cryptosystem cannot be considered secure unless it remains safe even when the attacker knows all details except the key in use. A published design that withstands analysis is a candidate for trust, but no unpublished design can be considered trustworthy. Without publication and analysis, there is no basis for trust. Of course "published" has a special meaning in some situations. Someone in a major government cryptographic agency need not make a design public to have it analysed; he need only ask the cryptanalysts down the hall to have a look. Having a design publicly broken might be a bit embarrassing for the designer, but he can console himself that he is in good company; breaks routinely happen. Even the NSA can get it wrong; Matt Blaze found a flaw [3] in their Clipper chip within weeks of the design being de-classified. Other large organisations can too: Deutsche Telekom's Magenta cipher was broken[4] by a team that included Bruce Schneier within hours of being first made public at an AES candidates' conference. Nor are the experts immune — they may find flaws in other people's ciphers but that does not mean their designs are necessarily safe. Blaze and Schneier designed a cipher called MacGuffin[5] that was broken[6] before the end of the conference they presented it at. In any case, having a design broken — even broken by (horrors!) some unknown graduate student rather than a famous expert — is far less embarrassing than having a deployed system fall to a malicious attacker. At least when both design and attacks are in public research literature, the designer can either fix any problems that are found or discard one approach and try something different. 
The hard part of security system design is not usually the cryptographic techniques that are used in the system. Designing a good cryptographic primitive — a block cipher, stream cipher or cryptographic hash — is indeed a tricky business, but for most applications designing new primitives is unnecessary. Good primitives are readily available; see the linked articles. The hard parts are fitting them together into systems and managing those systems to actually achieve security goals. Schneier's preface to Secrets and Lies [7] discusses this in some detail. His summary:

For links to several papers on the difficulties of cryptography, see our bibliography. Then there is the optimism of programmers. As for databases and real-time programming, cryptography looks deceptively simple. The basic ideas are indeed simple and almost any programmer can fairly easily implement something that handles straightforward cases. However, as in the other fields, there are also some quite tricky aspects to the problems and anyone who tackles the hard cases without both some study of relevant theory and considerable practical experience is almost certain to get it wrong. This is demonstrated far too often. For example, companies that implement their own cryptography as part of a product often end up with something that is easily broken. Examples include the addition of encryption to products like Microsoft Office [8], Netscape [9], Adobe's Portable Document Format (PDF) [10], and many others. Generally, such problems are fixed in later releases. These are major companies and both programmers and managers on their product teams are presumably competent, but they routinely get the cryptography wrong. Even when they use standardised cryptographic protocols, they may still mess up the implementation and create large weaknesses. For example, Microsoft's first version of PPTP was vulnerable to a simple attack [11] because of an elementary error in implementation. There are also failures in products where encryption is central to the design. Almost every company or standards body that designs a cryptosystem in secret, ignoring Kerckhoffs' Principle, produces something that is easily broken. Examples include the Contents Scrambling System (CSS) encryption on DVDs, the WEP encryption in wireless networking, [12] and the A5 encryption in GSM cell phones [13]. 
Such problems are much harder to fix when the flawed designs are included in standards or have widely deployed hardware implementations; updating those is far more difficult than releasing a new software version.

Beyond the genuine difficulties of implementing real products, there are also systems that both get the cryptography horribly wrong and make extravagant marketing claims. These are often referred to as snake oil.

Principles and terms

Cryptography proper is the study of methods of encryption and decryption. Cryptanalysis, or "codebreaking", is the study of how to read encrypted messages without possessing the key. Methods of defeating cryptosystems have a long history and an extensive literature, and anyone designing or deploying a cryptosystem must take cryptanalytic results into account. Cryptology ("the study of secrets", from the Greek) is the more general term encompassing both cryptography and cryptanalysis. "Crypto" is sometimes used as a short form for any of the above.

Codes versus ciphers

In common usage, the term "code" often means any method of encryption or meaning-concealment. In cryptography, however, a code is more specific: a linguistic procedure that replaces a unit of plaintext with a code word or code phrase. For example, "apple pie" might replace "attack at dawn". Each code word or code phrase carries a specific meaning.

A cipher (or cypher) is a system of algorithms for encryption and decryption. Ciphers operate at a lower level than codes, using a mathematical operation to convert understandable plaintext into unintelligible ciphertext. The meaning of the material is irrelevant; a cipher just manipulates letters or bits, or groups of those. A cipher takes a key and plaintext as input and produces ciphertext as output. Decryption reverses the process, turning ciphertext back into plaintext. Ciphertext should bear no resemblance to the original message.
Ideally, it should be indistinguishable from a random string of symbols; any non-random property may give a skilled cryptanalyst an opening.

The exact operation of a cipher is controlled by a key, a secret parameter for the cipher algorithm. The key may be different every day, or even different for every message. By contrast, the operation of a code is controlled by a codebook listing all the code words, and codebooks are much harder to change.

Codes are not generally practical for lengthy or complex communications, and they are difficult to implement in software, since the problem is as much linguistic as mathematical. If the only times the messages need to name are "dawn", "noon", "dusk" and "midnight", then a code is fine; usable code words might be "John", "George", "Paul" and "Ringo". However, if messages must be able to specify times like 11:37 AM, a code is inconvenient. Also, if a code is used many times, an enemy is quite likely to work out that "John" means "dawn" or whatever; there is no long-term security.

An important difference is that changing a code requires retraining users or creating and (securely!) delivering new codebooks, whereas changing a cipher key is much easier. If an enemy gets a copy of your codebook (whether or not you are aware of this!), the code becomes worthless until you replace those books. By contrast, an enemy who obtains one of your cipher machines or learns the algorithm for a software cipher should gain nothing at all; see Kerckhoffs' Principle. If an enemy learns the key, that defeats a cipher, but keys are easily changed; in fact, the procedures for any cipher normally include some method for routinely changing the key.

For these reasons, ciphers are generally preferred in practice. Nevertheless, there are niches where codes are quite useful. A small number of codes can represent a set of operations known to sender and receiver.
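In the sense used here, a code is essentially a lookup table that sender and receiver share. The sketch below is a minimal illustration, assuming Python, that builds one from the time-of-day code words in the paragraph above; the table and function names are hypothetical.

```python
# Hypothetical codebook built from the article's example code words.
CODEBOOK = {
    "dawn": "John",
    "noon": "George",
    "dusk": "Paul",
    "midnight": "Ringo",
}
# The receiver holds the inverse table to turn code words back into meanings.
DECODEBOOK = {word: meaning for meaning, word in CODEBOOK.items()}


def encode(meaning: str) -> str:
    """Replace a whole unit of meaning with its code word."""
    if meaning not in CODEBOOK:
        # A code can only say what its book anticipated.
        raise ValueError(f"no code word for {meaning!r}")
    return CODEBOOK[meaning]


def decode(word: str) -> str:
    """Look a code word up in the inverse table."""
    return DECODEBOOK[word]


print(encode("dawn"))   # John
print(decode("Ringo"))  # midnight
```

Asking for a code word for "11:37 AM" raises an error because the codebook never anticipated that message, and changing the code means redistributing both tables to every user. A cipher avoids both problems because it operates on letters or bits rather than on meanings.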
"Climb Mount Niikata" was a final order for the Japanese mobile striking fleet to attack Pearl Harbor, while "visit Aunt Shirley" could order a terrorist to trigger a chemical weapon at a particular place. If the codes are not re-used or foolishly chosen (e,g. using "flyboy" for an Air Force officer) and do not have a pattern (e.g. using "Lancelot" and "Galahad" for senior officers, making it easy for an enemy to guess "Arthur" or "Gawain"), then there is no information to help a cryptanalyst and the system is extremely secure. Codes may also be combined with ciphers. Then if an enemy breaks a cipher, much of what he gets will be code words. Unless he either already knows the code words or has enough broken messages to search for codeword re-use, the code may defeat him even if the cipher did not. For example, if the Americans had intercepted and decrypted a message saying "Climb Mount Niikata" just before Pearl Harbor, they would likely not have known its meaning. There are historical examples of enciphered codes or encicodes. There are also methods of embedding code phrases into apparently innocent messages; see steganography below. In military systems, a fairly elaborate system of code words may be used. KeyingWhat a cipher attempts to do is to replace a difficult problem, keeping messages secret, with a much more tractable one, managing a set of keys. Of course this makes the keys critically important. Keys need to be large enough and highly random; those two properties together make them effectively impossible to guess or to find with a brute force search. See cryptographic key for discussion of the various types of key and their properties, and random numbers below for techniques used to generate good ones. Kerckhoffs' Principle is that no system should be considered secure unless it can resist an attacker who knows all its details except the key. 
The most fearsome attacker is one with strong motivation, large resources, and few scruples; such an attacker will learn all the other details sooner or later. Defending against him requires a system whose security depends only on keeping the keys secret.

More generally, managing relatively small keys (creating good ones, keeping them secret, ensuring that the right people have them, and changing them from time to time) is not especially easy, but it is at least a reasonable proposition in many cases. See key management for the techniques.

However, in almost all cases it is a bad idea to rely on a system that requires large things to be kept secret. Security through obscurity (designing a system that depends for its security on keeping its inner workings secret) is not usually a good approach. Nor, in most cases, is a one-time pad, which needs a key as large as the whole set of messages it will protect, or a code, which is secure only as long as the enemy does not have the codebook. There are niches where each of those techniques can be used, but managing large secrets is always problematic and often entirely impractical. In many cases, it is no easier than the original difficult problem of keeping the messages secret.

Basic mechanisms

In describing cryptographic systems, the players are traditionally called Alice and Bob, or just A and B. We use these names throughout the discussion below.

Secret key systems

Until the 1970s, all publicly known cryptosystems used secret key, or symmetric key, cryptography. In such a system there is only one key for a message; that key can be used either to encrypt or to decrypt the message, and it must be kept secret. Both the sender and the receiver must have the key, and third parties (potential intruders) must be prevented from obtaining it. Symmetric key encryption may also be called traditional, shared-secret, secret-key, or conventional encryption.
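A minimal sketch of the symmetric idea, assuming only Python's standard library: one shared 256-bit key both encrypts and decrypts. For illustration the cipher is a toy stream construction (a keystream derived from HMAC-SHA256 in counter mode, XORed with the data); the function names and the 16-byte nonce are assumptions, and the construction provides no integrity protection. In keeping with the warnings earlier in this article about home-made cryptography, real systems should use a vetted cipher from a maintained library rather than anything like this.

```python
import hashlib
import hmac
import secrets

NONCE_BYTES = 16  # assumed nonce size for this sketch


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Pseudo-random stream: HMAC-SHA256(key, nonce || counter), counter = 0, 1, 2, ..."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    # A fresh random nonce per message makes a repeated keystream overwhelmingly unlikely.
    nonce = secrets.token_bytes(NONCE_BYTES)
    stream = keystream(key, nonce, len(plaintext))
    return nonce + bytes(p ^ s for p, s in zip(plaintext, stream))


def decrypt(key: bytes, message: bytes) -> bytes:
    # The same key regenerates the same keystream; XORing again recovers the plaintext.
    nonce, body = message[:NONCE_BYTES], message[NONCE_BYTES:]
    stream = keystream(key, nonce, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


shared_key = secrets.token_bytes(32)  # held by Alice and Bob only
message = encrypt(shared_key, b"attack at dawn")
assert decrypt(shared_key, message) == b"attack at dawn"
```

Decrypting with any other key yields only noise, which is the sense in which everything depends on Alice and Bob keeping the one shared key secret.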
Historically, ciphers worked at the level of letters or groups of letters; see history of cryptography for details. Attacks on them used techniques based largely on linguistic analysis, such as frequency counting; see cryptanalysis.

Types of modern symmetric cipher

On computers, there are two main types of symmetric encryption algorithm.

A block cipher breaks the data into fixed-size blocks and encrypts each block under control of the key. Since the message length will rarely be an exact multiple of the block size, some form of "padding" is usually needed to make the final block long enough. The block cipher itself defines how a single block is encrypted; modes of operation specify how these operations are combined to achieve some larger goal.

A stream cipher encrypts a stream of input data by combining it with a pseudo-random stream of data generated under control of the encryption key.

To a great extent, the two are interchangeable; almost any task that needs a symmetric cipher can be done by either. In particular, any block cipher can be used as a stream cipher in some modes of operation. Stream ciphers are often faster than block ciphers, and some of them are very easy to implement in hardware, which makes them attractive for dedicated devices. However, which one is used in a particular application depends largely on the type of data to be encrypted. Oversimplifying slightly, stream ciphers work well for streams of data, while block ciphers work well for chunks of data. Stream ciphers are the usual technique for encrypting a communication channel, for example in military radio or cell phones, or for encrypting network traffic at the level of physical links. Block ciphers are usual for things like encrypting disk blocks, network traffic at the packet level (see IPsec), or email messages (PGP).

Another method, usable manually or on a computer, is a one-time pad.
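A one-time pad is simple enough to sketch in a few lines. The version below XORs the message with a random pad of the same length. It uses Python's `secrets` module as a stand-in for a truly random source, which is an assumption: `secrets` draws from the operating system's cryptographically secure generator, not from a physical random process, so the provable-security guarantee does not strictly carry over. The function names are hypothetical.

```python
import secrets


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("pad must be exactly as long as the message")
    return bytes(x ^ y for x, y in zip(a, b))


def otp_encrypt(plaintext: bytes) -> tuple[bytes, bytes]:
    """Return (pad, ciphertext). The pad is the key and must reach the receiver securely."""
    # Assumption: secrets (the OS CSPRNG) stands in for a truly random source.
    pad = secrets.token_bytes(len(plaintext))
    return pad, xor_bytes(plaintext, pad)


def otp_decrypt(pad: bytes, ciphertext: bytes) -> bytes:
    # XOR is its own inverse, so decryption is the same operation.
    return xor_bytes(ciphertext, pad)


pad, ciphertext = otp_encrypt(b"attack at dawn")
assert otp_decrypt(pad, ciphertext) == b"attack at dawn"

# Reusing a pad is fatal: the pad cancels out, leaving p1 XOR p2.
p1, p2 = b"attack at dawn", b"retreat at ten"
c1, c2 = xor_bytes(p1, pad), xor_bytes(p2, pad)
assert xor_bytes(c1, c2) == xor_bytes(p1, p2)
```

The last lines show why no part of a pad may ever be reused: XORing two ciphertexts made with the same pad cancels the pad and leaves the XOR of the two plaintexts, which a cryptanalyst can often unpick.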
A one-time pad works much like a stream cipher, but it does not need to generate a pseudo-random stream, because its key is a truly random stream as long as the message. It is the only known cipher that is provably secure (provided the key is truly random and no part of it is ever reused), but it is impractical for most applications because managing such keys is too difficult.

Key management

More generally, key management is a problem for any secret key system.