Multi-touch interface: Difference between revisions

imported>Pat Palmer mNo edit summary |


{{subpages}}

{{Image|Tom_Cruise.jpg|right|275px|Tom Cruise in Minority Report (2002) using gestures similar to those used in Multi-Touch Technology}}

A '''multi-touch interface''' is a [[Human Computer Interface|human-computer interface]] that lets users operate a computer without input devices such as a mouse or mechanical keyboard. Instead, the user interacts with the computer by touching and making gestures on a specialized touch-sensitive display surface that can detect multiple points of contact (touches) and recognize certain gestures. This differs from a classic laptop touchpad or ATM screen, which recognizes only one touch at a time.

After several decades of research by universities, companies, and research groups, Multi-Touch Technology (MTT) exploded onto the commercial scene in 2007 with the introduction of both Apple's [[iPhone]] and the [[Microsoft Surface]] tabletop computer. Even before [[Smartphone|smart phones]] made multi-touch ubiquitous, films and television began depicting multi-touch in shows like [[CSI]], [[NCIS]], or [[Fringe]], where large wall ''touch'' displays are used to scan through criminal evidence. Multi-touch screens also began to be used by weathermen, [[ESPN]] (March Madness Selection Sunday), and news reporters (2008 Presidential Election). The application of multi-touch is now expanding rapidly across a variety of industries.

{{TOC|center}}

== How it works ==

Multi-touch systems may use a touch screen, table, wall, or touch pad. The screen may detect touch points and movement by a variety of means, including heat, finger pressure, high capture rate cameras, infrared light, optic capture, and shadow capture. The screens must be supplemented by a signal-processing microcomputer ("controller"), as well as special software for handling the touch events. A multi-touch screen typically replaces a mouse and keyboard, instead providing:

::*A virtual ''soft'' keyboard for input of text and numbers.

::*Ability to simulate a mouse by dragging a finger across the display.

::*Certain gestures that have specific application functionality (e.g., the pinch, which zooms in and out).

The screen surface detects touches and sends a signal to the controller, which filters noise and determines pressure, speed, and direction. The controller also performs [[analog-to-digital conversion]] and sends the digital outputs to the software, which usually presents an event-driven [[application programming interface]] to programmers.
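The controller stage described above can be illustrated with a minimal sketch. It assumes the controller receives timestamped positions for one contact; the `Sample` type, the moving-average filter, and the function names are hypothetical choices for illustration, not taken from any particular device.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    t: float  # time in seconds
    x: float  # horizontal position in pixels
    y: float  # vertical position in pixels


def smooth(samples, window=3):
    """Reduce noise with a moving average over the last `window` positions."""
    out = []
    for i, s in enumerate(samples):
        recent = samples[max(0, i - window + 1): i + 1]
        out.append(Sample(
            s.t,
            sum(r.x for r in recent) / len(recent),
            sum(r.y for r in recent) / len(recent),
        ))
    return out


def speed_and_direction(a, b):
    """Return (speed in pixels/second, direction in degrees) from a to b."""
    dt = b.t - a.t
    if dt <= 0:
        raise ValueError("samples must be in increasing time order")
    dx, dy = b.x - a.x, b.y - a.y
    return math.hypot(dx, dy) / dt, math.degrees(math.atan2(dy, dx))
```

For example, a contact that moves 3 pixels right and 4 pixels down in half a second yields a speed of 10 pixels per second. Real controllers typically do this work in firmware and with more elaborate filters.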

Surface detection of touches can be implemented with various hardware approaches, including capacitive, resistive, infrared, and surface acoustic wave sensing mediums. This article currently focuses on capacitive and resistive, which are the types of technology used in mobile phones.

=== Capacitive Touch Screens ===

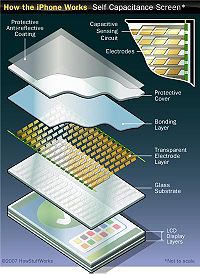

{{Image|MutualCapacitance.jpg|right|200px|How an iPhone capacitive screen works}} | |||

{{Image|MutualCapacitance.jpg|right| | |||

Capacitance-sensing multi-touch screens are the most prevalent implementation behind multi-touch cell phones.<ref name=Williams>Williams, Andrew. “Touchscreen lowdown -- Capacitive vs. Resistive” Web. 4 Aug. 2010 <http://www.knowyourmobile.com/features/392510/touchscreen_lowdown_capacitive_vs_resistive.html ></ref> There are a number of technologies within the capacitance-sensing family to detect multi-touch but, in smart phones, mutual capacitance sensing is dominant.<ref name=Williams /> The technology works by having a capacitive material whose local electrical charge changes when touched by a finger (or any other conductive material).


=== Resistive Touch Screens ===

Resistive sensing multi-touch screens are growing in prominence for several reasons:

::*They allow the user to use gloves


=== Surface Acoustic Wave Sensing Touch Screens === | === Surface Acoustic Wave Sensing Touch Screens === | ||

== | == Legal battles == | ||

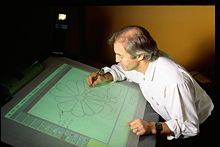

{{Image|BillActiveDesk.jpg|right|220px|Bill Buxton using ActiveDesk (1991-1994)}} | {{Image|BillActiveDesk.jpg|right|220px|Bill Buxton using ActiveDesk (1991-1994)}} | ||

Several different researchers and research groups worked on multi-touch technology simultaneously over the years, each approaching the problem in very similar ways (though with some differences) and building continuously on advances made by others. Significant inventions instrumental in the development of multi-touch technology date back to the 1970s. A broad history of the technology is available on [[Bill Buxton|Bill Buxton's]] website.<ref name=Buxton >Buxton, Bill. "Multi-Touch Systems That I Have Known and Loved." [[Bill Buxton]]. [[Bill Buxton]], 12 Jan. 2007. Web. 2 Aug. 2010. <http://www.billbuxton.com/multitouchOverview.html></ref> Buxton is well known in human-computer interaction research, and his history of touch technology is frequently cited.

For the purposes of this article, we may jump ahead to 2007, when two commercially successful multi-touch devices were introduced, the Apple [[iPhone]] and [[Microsoft Surface]]. The iPhone can detect two simultaneous touches and recognize basic gestures, including the ''pinch'' and the ''swipe''. It uses a capacitive-sensing touch screen,<ref>iPhone Design. Web. 8 Aug. 2010. <http://www.apple.com/iphone/design/></ref><ref name=Buxton /> and its marketplace success was integral in making multi-touch technology ubiquitous. Microsoft Surface is a table surface able to sense multiple user touches and gestures simultaneously. It uses optical technology to detect input,<ref>What is Microsoft Surface. Web. 8 Aug. 2010. <http://www.microsoft.com/surface/en/us/Pages/Product/WhatIs.aspx ></ref> and because of its price ($14,000), it has been available mainly in corporate settings.

It is well documented that [[Myron Krueger]] was already using, in 1983, the hand gestures we think of as commonplace today (pinching to zoom/scale and resize, etc.).<ref name =Buxton /> It is unclear who came up with the idea for these gestures, but many research groups used them in their own multi-touch implementations long before [[Apple Inc.]] released the [[iPhone]] in 2007. Concurrent with the iPhone's release, however, Apple filed patent applications for many of the gestures, as well as for the multi-touch technology that researchers had been using over the years. Although Apple may have been the first company to file the ideas with the [[U.S. Patent Office]], the patent rights are still not settled, owing to the long-standing use of the gestures and many of the technologies.

In 2010, numerous companies are producing smartphones with multi-touch screens, many of which look more and more similar to the [[iPhone]] and respond to the same gestures. This was bound to result in litigation. The following list of lawsuits is not comprehensive but is intended to provide a sampling of the type of legal claims being made:


=== Apple’s Lawsuit: Apple vs. HTC ===

On March 2, 2010, [[Apple Inc.]] filed suit against [[HTC]] Corp. for violating 20 of Apple’s patents related to the [[iPhone]] and other Apple products (U.S. Patent Numbers 7,362,331; 7,479,949; 7,657,849; 7,469,381; 5,920,726; 7,633,076; 5,848,105; 7,383,453; 5,455,599; 6,424,354; 5,481,721; 5,519,867; 5,556,337; 5,929,852; 5,946,647; 5,969,705; 6,275,983; 6,343,263; 5,915,131; and RE39,486).<ref>Apple Inc. vs High Tech Computer Corp., A/k/a HTC Corp., HTC(B.V.I.) Corp., HTC America Inc., Exedea, Inc. The United States District Court For The District of Delaware. 02 Mar. 2010. Print. </ref> The patents listed in the lawsuit cover a wide variety of technologies including, but not limited to, touch screen command determination using heuristics, unlocking a device with a gesture, display rotation, camera power management, and signal processing. Many news and technology sources have speculated that this is more of a shot at [[Google]]/[[Android]] than it is at [[HTC]]. Though none of these patents are for multi-touch directly, many of them are for gestures and gesture-based capabilities provided by Apple technology. This court case has yet to see a courtroom.

=== HTC Counters: HTC vs. Apple ===

On May 12, 2010, [[HTC]] filed a countersuit against [[Apple Inc.]] citing 5 of HTC’s patents (U.S. Patent Numbers 6,999,800; 7,716,505; 5,541,988; 6,058,183; and 6,320,957).<ref>Krause, Kevin. "The Five Patents in Question in the HTC v. Apple Countersuit." Phandroid. 12 May 2010. Web. 3 Aug. 2010. <http://phandroid.com/2010/05/12/the-five-patents-in-question-in-the-htc-v-apple-countersuit/> </ref> Only one of these patents (6,058,183) relates to multi-touch technology; however, the lawsuit probably would not have come about had Apple not filed the original lawsuit. This case, like the other, has yet to see a courtroom.

===Elan Microelectronics files a complaint with ITC ===

On March 29, 2010, [[Elan Microelectronics]] (EMG) filed a complaint with the [[International Trade Commission]] over Apple’s use of multi-touch technology in just about all of its products. The patent in question is U.S. Patent Number 5,825,352: “Multiple Fingers Contact Sensing Method for Emulating Mouse Buttons and Mouse Operations on a Touch Sensor Pad”.<ref>Bisset, Stephen J., and Bernard Kasser. Multiple Fingers Contact Sensing Method for Touch Sensor Pad - Involves Indicating Simultaneous Presence of Two Fingers in Response to Indication of Two Maximum Peaks. LOGITECH INC (LOGI-Non-standard), assignee. Patent US5825352-A. 20 Oct. 1998. Print.</ref> In its complaint, EMG asked for a complete ban on the import of the [[iPad]], [[iPhone]], [[iPod Touch]], [[MacBook]], and [[Magic Mouse]].<ref>Martin, Tim. "Cell Phone Multi-Touch Technology Lawsuits Continue." News Blaze. 7 Apr. 2010. Web. 3 Aug. 2010. <http://newsblaze.com/story/20100407135415tm75.nb/topstory.html></ref>

== Barriers to adoption ==

There are some barriers preventing multi-touch technologies from becoming the method of interfacing with computer systems for everyone. Some of the more objective barriers, such as the high cost of these devices and the lagging hardware support for high-performance software, may be alleviated with time. Military and industrial use, for example, is currently limited where durability and ruggedness are required or where quick, cheap repairs are necessary. These objective barriers are typical of any nascent technology; while the cost may always remain relatively high compared to a traditional input device (e.g., a keyboard or mouse), the prices are expected to fall as the technology matures and becomes more widely used.

Other barriers are more subjective, including the relative complexity of learning to use multi-touch interfaces, as compared with simpler input devices. Many users prefer the tactile feel of traditional keyboards and text input devices. Reasons for this preference are so diverse that a one-size-fits-all approach to solving such a broad problem is almost always inadequate. Some users perceive their typing to be faster if they receive physical feedback after each key press. Developers have attempted to rectify this problem by delivering [[haptic feedback]] (a vibration under the point of contact when input is successfully received). Others enjoy the ability to type or draw while the display area remains in their peripheral vision. This benefit is lost particularly for smartphones, where all input and output take place on a single screen. There is also a large segment of computer users with disabilities who rely on the physical layout of their input device.

An increasing number of attachment devices are becoming available to complement the multi-touch interface, thus allowing users to switch between the touch screen and traditional methods of data input. Multi-touch may not become the exclusive interface to all computers, but it will certainly continue to grow for some time to come.

== Future uses ==

The possibilities for the uses of multi-touch are endless. Through the years we have seen futuristic versions of multi-touch in television and on the big screen; but when are those things going to be real for the rest of us? In this section I will mention a few sectors where I believe that multi-touch is going to have a big impact in the future.

===Education===

It is possible that touch-screen technology might replace chalk and white boards, with their particulate and chemical air contaminants, in classrooms. While the initial outlay for such technology would likely be hefty, costs for consumable supplies such as chalk, markers, projection bulbs, erasers, etc. would be eliminated and the time to restock supplies or find shared projectors would be saved. Touch screen displays could provide teachers with improved functionality, responsiveness, and comfort. The acquisition costs as well as the environmental pollution from manufacturing and disposal would need to be factored into such decisions for future educational use.

===Military===

The [[military]] requires multi-touch technology that is more durable than that for civilian use. [[HP]] has released a multi-touch enabled notebook that is “engineered to meet the tough [[MIL-STD 810G]] military-standard tests for vibration, dust, humidity, altitude, and high and low temperatures.”<ref>Hp. HP Unveils Ultra-thin, Touch-enabled Convertible Tablet and Notebook PCs for Small and Midsize Businesses. News Release. Hp, 1 Mar. 2010. Web. 3 Aug. 2010. <http://www.hp.com/hpinfo/newsroom/press/2010/100301xa.html></ref> As ruggedized multi-touch technology becomes more available, troops will be more inclined to purchase these products, and governments may be more willing to issue multi-touch devices for regulation use.

===Health Care===

Anyone who has been to a doctor’s office or hospital has seen the massive amount of paper and [[x-ray]] sheets that are used on a daily basis. Doctors and nurses are moving rapidly towards computer use for electronic viewing of patient medical histories and for entry of notes about patient visits. Multi-touch [[Tablet PC]]s and [[iPad]]s are in the “testing” phase with a number of hospitals. According to [[John Halamka]] of [[Harvard Medical School]], “the combination of lower hardware acquisition costs and relative lack of learning curve (since many people already have smartphones) could foster widespread adoption of the iPad in health-care settings and pave the way for electronic health records to become the norm.”<ref>White, Martha C. "With the iPad, Apple May Just Revolutionize Medicine." The Washington Post. 11 Apr. 2010. Web. 3 Aug. 2010. <http://www.washingtonpost.com/wp-dyn/content/article/2010/04/09/AR2010040906341.html></ref> Concerns about whether chemical-sensitive touch screens can be adequately disinfected if contaminated by ill patients have not been resolved, although the use of disposable plastic barriers, or other strategies, has been proposed.

== Software ==

{{Image|Multitouchcontrol.gif|right|220px|Multi-touch signal processing and recognition}}

Multi-touch interfaces must inherently allow concurrency in the way they detect user input. Concurrency allows for multiple points of contact on the hardware surface to all be simultaneously detected and interpreted at various stages by the software engineer. The handling of concurrency is typically a low-level process performed by the operating system through interrupts, polling, or signals. The operating system can subsequently package this information in an easily accessible fashion through the application programming interface (API). This abstraction is the key to the relative simplicity of programming for multiple points of contact.

The “Observer” design pattern is a software design pattern used by many APIs to handle user interface interaction, and it is applied to multi-touch interfaces in a similar fashion. It allows a programmer to designate an object, in this case the touch interface, and observers that will be informed of designated changes to the object by activating an observer’s specified method. Instead of handling multiple threads or processes for each point of contact, the operating system provides a single stream of fast-paced, but sequential, “events” to the object’s observers. These events each allow access to information about the state of the touch interface. Through the observers, the software engineer can decide what to do with each event by extracting information from them. The type of information that could be extracted includes the particular point of contact and its position, timing, inertia, etc. In this sense, multi-touch programming is very similar to single-touch programming except that the programmers must keep track of which point of contact they are handling at any given time. The programmer can choose to handle as few or as many points of contact as their application needs. However, the maximum number of simultaneous contact points is likely constrained by the device’s firmware due to hardware limitations.
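The observer arrangement described above might look roughly like the following sketch. All names here (`TouchEvent`, `TouchSurface`, `ContactTracker`, and the event kinds) are hypothetical and do not correspond to any real platform API; the point is only to show a single sequential event stream in which each event carries a contact identifier.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class TouchEvent:
    contact_id: int  # distinguishes simultaneous points of contact
    kind: str        # "down", "move", or "up" (assumed event kinds)
    x: float
    y: float


class TouchSurface:
    """The observed object: delivers events to observers one at a time."""

    def __init__(self) -> None:
        self._observers: List[Callable[[TouchEvent], None]] = []

    def subscribe(self, observer: Callable[[TouchEvent], None]) -> None:
        self._observers.append(observer)

    def dispatch(self, event: TouchEvent) -> None:
        for observer in self._observers:
            observer(event)


class ContactTracker:
    """An observer that remembers where each active contact currently is."""

    def __init__(self) -> None:
        self.active: Dict[int, Tuple[float, float]] = {}

    def on_touch(self, event: TouchEvent) -> None:
        if event.kind == "up":
            self.active.pop(event.contact_id, None)
        else:  # "down" and "move" both update the contact's position
            self.active[event.contact_id] = (event.x, event.y)
```

An application would call `surface.subscribe(tracker.on_touch)` once and then read `tracker.active` whenever it needs the current set of contacts. No threads are involved, because the events arrive sequentially.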

Many APIs provide additional abstraction in a variety of ways. A popular approach to simplifying multi-touch interaction comes in the form of pre-defined shapes or patterns typically referred to as “gestures”. Android 1.6 introduced a gestures package which provides a statistical approach to determining whether a pattern drawn on the touch interface by a user is equivalent to any member of a pre-defined set. The match is reported as a score rather than a yes-or-no answer because this form of communication between the user and the application is inexact. Windows 7 provides similar gestural functionality and even allows legacy applications to benefit from touch interfacing by handling some events if they were not interpreted by the application. This approach allows Windows to augment the intuitiveness of an application by allowing interactions such as pushing buttons, moving windows, and panning on scrollable views. Letting Windows handle these intuitive events has the additional benefit of presenting uniform behavior across software applications.
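To make the idea of scoring a drawn pattern against a pre-defined set concrete, here is a small sketch. It is not the algorithm used by Android or Windows. It is a hypothetical scorer that resamples each stroke, removes position and size, and turns the mean point-to-point distance into a score between 0 and 1. The function names, the 16-point resampling, and the 0.8 threshold are all assumptions.

```python
import math


def resample(points, n=16):
    """Return n points spaced evenly along the stroke's path length."""
    dists = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dists.append(dists[-1] + math.hypot(x1 - x0, y1 - y0))
    total = dists[-1]
    if total == 0:
        return [points[0]] * n
    out, seg = [], 0
    for i in range(n):
        target = total * i / (n - 1)
        while seg < len(points) - 2 and dists[seg + 1] < target:
            seg += 1
        span = dists[seg + 1] - dists[seg]
        f = 0.0 if span == 0 else (target - dists[seg]) / span
        (x0, y0), (x1, y1) = points[seg], points[seg + 1]
        out.append((x0 + f * (x1 - x0), y0 + f * (y1 - y0)))
    return out


def normalize(points):
    """Center the stroke on its centroid and scale it to unit extent."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    scale = max(max(abs(x - cx), abs(y - cy)) for x, y in points) or 1.0
    return [((x - cx) / scale, (y - cy) / scale) for x, y in points]


def score(stroke, template, n=16):
    """Similarity in (0, 1]; 1.0 means the normalized strokes coincide."""
    a = normalize(resample(stroke, n))
    b = normalize(resample(template, n))
    mean = sum(math.hypot(ax - bx, ay - by)
               for (ax, ay), (bx, by) in zip(a, b)) / n
    return 1.0 / (1.0 + mean)


def recognize(stroke, templates, threshold=0.8):
    """Return (best template name or None, best score)."""
    best_name, best_score = None, 0.0
    for name, template in templates.items():
        s = score(stroke, template)
        if s > best_score:
            best_name, best_score = name, s
    return (best_name if best_score >= threshold else None), best_score
```

With templates for a rightward and a downward swipe, a slightly wobbly horizontal stroke scores close to 1 against the first and much lower against the second. The caller decides how high a score must be before acting on it.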

== References ==

{{reflist|2}}[[Category:Suggestion Bot Tag]]

Latest revision as of 16:01, 21 September 2024

A multi-touch interface is a human-computer interface that lets users operate a computer without input devices such as a mouse or mechanical keyboard. Instead, the user interacts with the computer by touching and making gestures on a specialized touch-sensitive display surface that can detect multiple points of contact (touches) and recognize certain gestures. This differs from a classic laptop touchpad or ATM screen, which recognizes only one touch at a time.

After several decades of research by universities, companies, and research groups, Multi-Touch Technology (MTT) exploded onto the commercial scene in 2007 with the introduction of both Apple's iPhone and the Microsoft Surface tabletop computer. Even before smart phones made multi-touch ubiquitous, films and television began depicting multi-touch in shows like CSI, NCIS, or Fringe, where large wall touch displays are used to scan through criminal evidence. Multi-touch screens also began to be used by weathermen, ESPN (March Madness Selection Sunday), and news reporters (2008 Presidential Election). The application of multi-touch is now expanding rapidly across a variety of industries.

How it works

Multi-touch systems may use a touch screen, table, wall, or touch pad. The screen may detect touch points and movement by a variety of means, including heat, finger pressure, high capture rate cameras, infrared light, optic capture, and shadow capture. The screens must be supplemented by a signal-processing microcomputer ("controller"), as well as special software for handling the touch events. A multi-touch screen typically replaces a mouse and keyboard, instead providing:

- A virtual soft keyboard for input of text and numbers.

- Ability to simulate a mouse by dragging a finger across the display.

- Certain gestures that have specific application functionality (e.g., the pinch, which zooms in and out).

The screen surface detects touches and sends a signal to the controller, which filters noise and determines pressure, speed, and direction. The controller also performs analog-to-digital conversion and sends the digital outputs to the software, which usually presents an event-driven application programming interface to programmers.

Surface detection of touches can be implemented with various hardware approaches, including capacitive, resistive, infrared, and surface acoustic wave sensing mediums. This article currently focuses on capacitive and resistive, which are the types of technology used in mobile phones.

Capacitive Touch Screens

Capacitance-sensing multi-touch screens are the most prevalent implementation behind multi-touch cell phones.[1] There are a number of technologies within the capacitance-sensing family to detect multi-touch but, in smart phones, mutual capacitance sensing is dominant.[1] The technology works by having a capacitive material whose local electrical charge changes when touched by a finger (or any other conductive material).

According to Apple’s patent (US 7,663,607 B2), mutual capacitance sensing uses an electrically conducting medium such as Indium Tin Oxide (ITO), arranged as an array formed by two layers. Driving lines form the first layer, and sensing lines, which usually run perpendicular to the driving lines, form the second layer. The driving lines are connected to a voltage source and the sensing lines to a capacitive sensing circuit. During a touch, the local electrical properties of the screen change, and the change is detected at the nodes where driving and sensing lines cross.[2]

Perhaps the main limitation of this approach is that it can’t be used in situations where the user has to wear gloves. Another limitation is its expense. Limitations aside, it offers high resolution and high clarity and is not affected by the environment. It should be noted that on February 16, 2010, Apple was awarded a patent for capacitive multi-touch which covers two implementations: single and mutual capacitance (patent no. US 7,663,607 B2).
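The grid of driving and sensing lines lends itself to a simple illustration of how several touches can be told apart. The sketch below is not taken from the patent. It assumes that the controller holds one reading per node, that a touch lowers the reading at nearby nodes relative to an untouched baseline, and that each touch shows up as a local peak in that drop. The function name and the threshold value are hypothetical.

```python
def find_touches(baseline, measured, threshold=5.0):
    """Return (row, col) nodes where the drop from baseline is a local peak.

    `baseline` and `measured` are equally sized 2D lists with one reading per
    node (rows = driving lines, columns = sensing lines, by assumption).
    """
    rows, cols = len(baseline), len(baseline[0])
    delta = [[baseline[r][c] - measured[r][c] for c in range(cols)]
             for r in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            d = delta[r][c]
            if d < threshold:
                continue  # too small to count as a touch
            is_peak = True
            for rr in range(max(0, r - 1), min(rows, r + 2)):
                for cc in range(max(0, c - 1), min(cols, c + 2)):
                    if (rr, cc) == (r, c):
                        continue
                    # Ties go to the node scanned first, so a flat plateau
                    # is reported as a single touch.
                    if (rr, cc) < (r, c):
                        is_peak = is_peak and d > delta[rr][cc]
                    else:
                        is_peak = is_peak and d >= delta[rr][cc]
            if is_peak:
                touches.append((r, c))
    return touches
```

Because every node is examined independently, two fingers far enough apart produce two separate peaks, which is what distinguishes this arrangement from a sensor that reports only one position.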

Resistive Touch Screens

Resistive sensing multi-touch screens are growing in prominence for several reasons:

- They allow the user to use gloves

- The technology is significantly less expensive

- The devices have lower power consumption than their peers.

Resistive multi-touch screens consist of two glass or acrylic panels that are coated with a conductive material like Indium Tin Oxide and are separated by a narrow gap. When the user touches the display, the conductive layers make contact, establishing an electrical current that is measured and processed by the controller. A limitation of this technology is that it is not as robust as its capacitive counterpart. Currently, attempts to add screen protection limit device functionality, which in turn limits how rugged the device can be made.
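As a rough illustration of the "measured and processed by the controller" step, the sketch below converts raw readings into screen coordinates for a single contact. It assumes a simple voltage-divider model in which the reading along each axis is proportional to the touch position, which the text above does not specify. The 12-bit converter range, the screen size, and the function name are hypothetical.

```python
def adc_to_position(adc_x, adc_y, adc_max=4095, width=320, height=480):
    """Map raw analog-to-digital readings to pixel coordinates.

    Assumes each reading grows linearly from 0 at one edge of the panel to
    `adc_max` at the opposite edge (an idealized, calibrated panel).
    """
    if not (0 <= adc_x <= adc_max and 0 <= adc_y <= adc_max):
        raise ValueError("reading outside the converter's range")
    x = adc_x / adc_max * (width - 1)
    y = adc_y / adc_max * (height - 1)
    return x, y
```

Real panels need per-device calibration, and tracking more than one contact requires additional measurements beyond this single-point model.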

Infrared Touch Screens

Surface Acoustic Wave Sensing Touch Screens

Legal battles

Several different researchers and research groups worked on multi-touch technology simultaneously over the years, each approaching the problem in very similar ways (though with some differences) and building continuously on advances made by others. Significant inventions instrumental in the development of multi-touch technology date back to the 1970s. A broad history of the technology is available on Bill Buxton's website.[3] Buxton is well known in human-computer interaction research, and his history of touch technology is frequently cited.

For the purposes of this article, we may jump ahead to 2007, when two commercially successful multi-touch devices were introduced, the Apple iPhone and Microsoft Surface. The iPhone can detect two simultaneous touches and recognize basic gestures, including the pinch and the swipe. It uses a capacitive-sensing touch screen,[4][3] and its marketplace success was integral in making multi-touch technology ubiquitous. Microsoft Surface is a table surface able to sense multiple user touches and gestures simultaneously. It uses optical technology to detect input,[5] and because of its price ($14,000), it has been available mainly in corporate settings.

It is well documented that Myron Krueger was already using the hand gestures we think of as commonplace today (pinching to zoom/scale and resize, etc.)[3] in 1983. It is unclear who came up with the idea for these gestures, but many research groups began using them in their own multi-touch implementations long before Apple Inc. released the iPhone in 2007. But concurrent with iPhone's release, Apple submitted patents for many of the gestures, as well as the multi-touch technology that researchers had been using over the years. Although Apple may have been the first company to file the ideas with the U.S. Patent Office, the patent rights are still not settled due to the long-standing use of the gestures and many of the technologies.

In 2010, numerous companies are producing smartphones with multi-touch screens, many of which look more and more similar to the iPhone and respond to the same gestures. This was bound to result in litigation. The following list of lawsuits is not comprehensive but is intended to provide a sampling of the type of legal claims being made:

Apple’s Lawsuit: Apple vs. HTC

On March 2, 2010, Apple Inc. filed suit against HTC Corp. for violating 20 of Apple’s patents related to the iPhone and other Apple products (U.S. Patent Numbers 7,362,331; 7,479,949; 7,657,849; 7,469,381; 5,920,726; 7,633,076; 5,848,105; 7,383,453; 5,455,599; 6,424,354; 5,481,721; 5,519,867; 5,556,337; 5,929,852; 5,946,647; 5,969,705; 6,275,983; 6,343,263; 5,915,131; and RE39,486).[6] The patents listed in the lawsuit cover a wide variety of technologies including, but not limited to, touch screen command determination using heuristics, unlocking a device with a gesture, display rotation, camera power management, and signal processing. Many news and technology sources have speculated that this is more of a shot at Google/Android than it is at HTC. Though none of these patents are for multi-touch directly, many of them are for gestures and gesture-based capabilities provided by Apple technology. This court case has yet to see a courtroom.

HTC Counters: HTC vs. Apple

On May 12, 2010, HTC filed a countersuit against Apple Inc. citing 5 of HTC’s patents (U.S. Patent Numbers 6,999,800; 7,716,505; 5,541,988; 6,058,183; and 6,320,957).[7] Only one of these patents (6,058,183) relates to multi-touch technology; however, the lawsuit probably would not have come about had Apple not filed the original lawsuit. This case, like the other, has yet to see a courtroom.

Elan Microelectronics files a complaint with ITC

On March 29, 2010, Elan Microelectronics (EMG) filed a complaint with the International Trade Commission over Apple’s use of multi-touch technology in just about all of its products. The patent in question is U.S. Patent Number 5,825,352: “Multiple Fingers Contact Sensing Method for Emulating Mouse Buttons and Mouse Operations on a Touch Sensor Pad”.[8] In its complaint, EMG asked for a complete ban on the import of the iPad, iPhone, iPod Touch, MacBook, and Magic Mouse.[9]

Barriers to adoption

Several barriers prevent multi-touch technologies from becoming the universal method of interfacing with computer systems. Some of the more objective barriers, such as the high cost of these devices and the lagging hardware support for high performance software, may be alleviated with time. Military and industrial use, for example, is currently limited where durability and ruggedness are required or where quick, cheap repairs are necessary. These objective barriers are typical of any nascent technology; while the cost may always remain relatively high compared to a traditional input device (e.g., a keyboard or mouse), prices are expected to fall as the technology matures and becomes more widely used.

Other barriers are more subjective, including the relative complexity of learning to use multi-touch interfaces compared with simpler input devices. Many users prefer the tactile feel of traditional keyboards and text input devices. The reasons for this preference are so diverse that a one-size-fits-all solution is almost always inadequate. Some users perceive their typing to be faster if they receive physical feedback after each key press. Developers have attempted to address this by delivering haptic feedback (a vibration under the point of contact when input is successfully received). Other users value the ability to type or draw while the display area remains in their peripheral vision. This benefit is lost on smartphones in particular, where all input and output take place on a single screen. There is also a large segment of computer users with disabilities who rely on the physical layout of their input device.

An increasing number of attachment devices are becoming available to complement the multi-touch interface, allowing users to switch between the touch screen and traditional methods of data input. Multi-touch may not become the exclusive interface to all computers, but its use is likely to continue growing for some time to come.

Future uses

The possible uses of multi-touch are wide-ranging. Over the years, futuristic versions of multi-touch have appeared on television and on the big screen, but when will they become real for the rest of us? This section describes a few sectors where multi-touch is likely to have a big impact in the future.

Education

It is possible that touch-screen technology might replace chalk and white boards, with their particulate and chemical air contaminants, in classrooms. While the initial outlay for such technology would likely be hefty, costs for consumable supplies such as chalk, markers, projection bulbs, erasers, etc. would be eliminated and the time to restock supplies or find shared projectors would be saved. Touch screen displays could provide teachers with improved functionality, responsiveness, and comfort. The acquisition costs as well as the environmental pollution from manufacturing and disposal would need to be factored into such decisions for future educational use.

Military

The military requires multi-touch technology that is more durable than that used by civilians. HP has released a multi-touch enabled notebook that is “engineered to meet the tough MIL-STD 810G military-standard tests for vibration, dust, humidity, altitude, and high and low temperatures.”[10] As ruggedized multi-touch technology becomes more available, troops will be more inclined to purchase these products, and governments may be more willing to issue multi-touch devices for regulation use.

Health Care

Anyone who has been to a doctor’s office or hospital has seen the massive amount of paper and x-ray sheets that are used on a daily basis. Doctors and nurses are moving rapidly towards computer use for electronic viewing of patient medical histories and for entry of notes about patient visits. Multi-touch Tablet PCs and iPads are in the “testing” phase with a number of hospitals. According to John Halamka of Harvard Medical School, “the combination of lower hardware acquisition costs and relative lack of learning curve (since many people already have smartphones) could foster widespread adoption of the iPad in health-care settings and pave the way for electronic health records to become the norm.”[11] Concerns about whether chemical-sensitive touch screens can be adequately disinfected after contamination by ill patients have not been resolved, although the use of disposable plastic barriers, or other strategies, has been proposed.

Software

Multi-touch interfaces must inherently support concurrency in the way they detect user input: multiple points of contact on the hardware surface must all be detected simultaneously and then interpreted at various stages by the software. The handling of concurrency is typically a low-level process performed by the operating system through interrupts, polling, or signals. The operating system then packages this information in an easily accessible form through the application programming interface (API). This abstraction is the key to the relative simplicity of programming for multiple points of contact.
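As a rough illustration of how simultaneous contacts might be packaged for an application, the following Python sketch compares two polled snapshots of the surface and produces an ordered list of events. It is not taken from any particular operating system; the names `TouchEvent` and `frame_to_events`, and the idea that the driver assigns each contact an integer identifier, are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class TouchEvent:
    kind: str        # "down", "move", or "up"
    contact_id: int  # hypothetical identifier assigned by the driver
    position: Point


def frame_to_events(previous: Dict[int, Point],
                    current: Dict[int, Point]) -> List[TouchEvent]:
    """Compare two polled snapshots and emit one event per changed contact."""
    events: List[TouchEvent] = []
    # Contacts present now but not before: a finger touched down.
    for cid in sorted(current.keys() - previous.keys()):
        events.append(TouchEvent("down", cid, current[cid]))
    # Contacts present in both snapshots: report only those that moved.
    for cid in sorted(current.keys() & previous.keys()):
        if current[cid] != previous[cid]:
            events.append(TouchEvent("move", cid, current[cid]))
    # Contacts that disappeared: a finger was lifted at its last position.
    for cid in sorted(previous.keys() - current.keys()):
        events.append(TouchEvent("up", cid, previous[cid]))
    return events


# Three consecutive snapshots: one finger, then two, then only the second.
frame_a = {0: (10.0, 10.0)}
frame_b = {0: (12.0, 10.0), 1: (50.0, 80.0)}
frame_c = {1: (50.0, 80.0)}

events_ab = frame_to_events(frame_a, frame_b)
events_bc = frame_to_events(frame_b, frame_c)
```

Although two fingers are on the surface at once in `frame_b`, the application only ever sees a flat list of events, each labelled with the contact it belongs to.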

The “Observer” design pattern is a software design pattern used by many APIs to handle user interface interaction, and it is applied to multi-touch interfaces in a similar fashion. It allows a programmer to designate an object, in this case the touch interface, and observers that will be informed of designated changes to the object by activating an observer’s specified method. Instead of handling multiple threads or processes for each point of contact, the operating system provides a single stream of fast-paced, but sequential, “events” to the object’s observers. These events each allow access to information about the state of the touch interface. Through the observers, the software engineer can decide what to do with each event by extracting information from them. The type of information that could be extracted includes the particular point of contact and its position, timing, inertia, etc. In this sense, multi-touch programming is very similar to single-touch programming except that the programmers must keep track of which point of contact they are handling at any given time. The programmer can choose to handle as few or as many points of contact as their application needs. However, the maximum number of simultaneous contact points is likely constrained by the device’s firmware due to hardware limitations.
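The Observer arrangement described above can be sketched in a few lines of Python. This is a minimal, hypothetical model rather than the API of any real toolkit: `TouchSurface` stands in for the touch interface, `ContactTracker` is an observer, and the event fields (kind, contact identifier, position) are assumptions chosen for the example.

```python
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]
Observer = Callable[[str, int, Point], None]


class TouchSurface:
    """Hypothetical subject: stands in for the touch interface."""

    def __init__(self) -> None:
        self._observers: List[Observer] = []

    def add_observer(self, observer: Observer) -> None:
        self._observers.append(observer)

    def dispatch(self, kind: str, contact_id: int, position: Point) -> None:
        # Events are delivered one at a time, even with several fingers down.
        for observer in self._observers:
            observer(kind, contact_id, position)


class ContactTracker:
    """Observer that remembers the last position of each contact it handles."""

    def __init__(self, max_contacts: int = 2) -> None:
        self.max_contacts = max_contacts  # the application's own limit
        self.active: Dict[int, Point] = {}

    def on_touch(self, kind: str, contact_id: int, position: Point) -> None:
        if kind == "down":
            # Ignore extra fingers beyond what this application needs.
            if len(self.active) < self.max_contacts:
                self.active[contact_id] = position
        elif kind == "move":
            if contact_id in self.active:
                self.active[contact_id] = position
        elif kind == "up":
            self.active.pop(contact_id, None)


surface = TouchSurface()
tracker = ContactTracker(max_contacts=2)
surface.add_observer(tracker.on_touch)

surface.dispatch("down", 0, (10.0, 10.0))
surface.dispatch("down", 1, (40.0, 40.0))
surface.dispatch("down", 2, (70.0, 70.0))  # third finger: ignored here
surface.dispatch("move", 1, (45.0, 42.0))
surface.dispatch("up", 0, (10.0, 10.0))
```

The only multi-touch-specific bookkeeping is the dictionary keyed by contact identifier; everything else would look the same in a single-touch handler.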

Many APIs provide additional abstraction in a variety of ways. A popular approach to simplifying multi-touch interaction comes in the form of pre-defined shapes or patterns typically referred to as “gestures”. Android 1.6 introduced a gestures package that takes a statistical approach to deciding whether a pattern drawn on the touch interface matches any member of a pre-defined set: each candidate match is reported with a score rather than a yes-or-no answer, because this form of communication between the user and the application is inherently inexact. Windows 7 provides similar gestural functionality and even allows legacy applications to benefit from touch input by handling some events itself when the application does not interpret them. This lets Windows make an application more intuitive by supporting interactions such as pushing buttons, moving windows, and panning scrollable views. Letting Windows handle these events has the additional benefit of presenting uniform behavior across software applications.
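To make the idea of scored gesture matching concrete, the following Python sketch compares a drawn stroke against a small set of templates and returns the best match with its score. It is a deliberately simple illustration and is not the algorithm used by Android or Windows: the distance-based `score` function, the 0.8 threshold, the template names, and the assumption that every stroke has already been resampled to the same number of points are all choices made for this example.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def normalize(stroke: List[Point]) -> List[Point]:
    """Translate and uniformly scale a stroke into a unit bounding box."""
    xs = [x for x, _ in stroke]
    ys = [y for _, y in stroke]
    min_x, min_y = min(xs), min(ys)
    size = max(max(xs) - min_x, max(ys) - min_y) or 1.0  # avoid dividing by 0
    return [((x - min_x) / size, (y - min_y) / size) for x, y in stroke]


def score(stroke: List[Point], template: List[Point]) -> float:
    """Return a similarity in (0, 1]; 1.0 means the shapes coincide."""
    if len(stroke) != len(template):
        raise ValueError("stroke and template must have the same length")
    a, b = normalize(stroke), normalize(template)
    mean_dist = sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)
    return 1.0 / (1.0 + mean_dist)


def recognize(stroke: List[Point],
              templates: Dict[str, List[Point]],
              threshold: float = 0.8) -> Tuple[Optional[str], float]:
    """Return (best template name or None, best score)."""
    best_name, best_score = max(
        ((name, score(stroke, t)) for name, t in templates.items()),
        key=lambda pair: pair[1],
    )
    return (best_name if best_score >= threshold else None, best_score)


# Hypothetical pre-defined gesture set, four points per template.
TEMPLATES = {
    "swipe_right": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)],
    "swipe_down": [(0.0, 0.0), (0.0, 1.0), (0.0, 2.0), (0.0, 3.0)],
}

# A slightly wobbly left-to-right stroke, and a scribble matching nothing.
wobbly = [(5.0, 5.0), (15.0, 5.5), (25.0, 5.0), (35.0, 5.5)]
scribble = [(0.0, 0.0), (3.0, 3.0), (0.0, 3.0), (3.0, 0.0)]

wobbly_name, wobbly_score = recognize(wobbly, TEMPLATES)
scribble_name, scribble_score = recognize(scribble, TEMPLATES)
```

Because the result is a score rather than a strict comparison, the application decides how tolerant to be: raising the threshold rejects sloppy input, while lowering it accepts more variation at the risk of false matches.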

References

- ↑ 1.0 1.1 Williams, Andrew. “Touchscreen lowdown -- Capacitive vs. Resistive.” Web. 4 Aug. 2010. <http://www.knowyourmobile.com/features/392510/touchscreen_lowdown_capacitive_vs_resistive.html>

- ↑ Hotelling, S, et al. Multipoint touchscreen. Apple Inc. assignee. Patent US 7,663,607 B2. February 16, 2010.

- ↑ 3.0 3.1 3.2 Buxton, Bill. "Multi-Touch Systems That I Have Known and Loved." Bill Buxton. Bill Buxton, 12 Jan. 2007. Web. 2 Aug. 2010. <http://www.billbuxton.com/multitouchOverview.html>

- ↑ iPhone Design. Web. 8 Aug. 2010. <http://www.apple.com/iphone/design/>

- ↑ What is Microsoft Surface. Web. 8 Aug. 2010. <http://www.microsoft.com/surface/en/us/Pages/Product/WhatIs.aspx>

- ↑ Apple Inc. vs High Tech Computer Corp., A/k/a HTC Corp., HTC(B.V.I.) Corp., HTC America Inc., Exedea, Inc. The United States District Court For The District of Delaware. 02 Mar. 2010. Print.

- ↑ Krause, Kevin. "The Five Patents in Question in the HTC v. Apple Countersuit." Phandroid. 12 May 2010. Web. 3 Aug. 2010. <http://phandroid.com/2010/05/12/the-five-patents-in-question-in-the-htc-v-apple-countersuit/>

- ↑ Bisset, Stephen J., and Bernard Kasser. Multiple Fingers Contact Sensing Method for Touch Sensor Pad - Involves Indicating Simultaneous Presence of Two Fingers in Response to Indication of Two Maximum Peaks. LOGITECH INC (LOGI-Non-standard), assignee. Patent US5825352-A. 20 Oct. 1998. Print.

- ↑ Martin, Tim. "Cell Phone Multi-Touch Technology Lawsuits Continue." News Blaze. 7 Apr. 2010. Web. 3 Aug. 2010. <http://newsblaze.com/story/20100407135415tm75.nb/topstory.html>

- ↑ HP. HP Unveils Ultra-thin, Touch-enabled Convertible Tablet and Notebook PCs for Small and Midsize Businesses. News Release. HP, 1 Mar. 2010. Web. 3 Aug. 2010. <http://www.hp.com/hpinfo/newsroom/press/2010/100301xa.html>

- ↑ White, Martha C. "With the iPad, Apple May Just Revolutionize Medicine." The Washington Post. 11 Apr. 2010. Web. 3 Aug. 2010. <http://www.washingtonpost.com/wp-dyn/content/article/2010/04/09/AR2010040906341.html>